
Open Source Data Observability: Pros, Cons, Alternatives

Explore the choices between open source and vendor-managed data observability in this comprehensive guide.

Every business understands the importance of collecting data, but having a vast dataset is not enough. You need to cut through the noise to find the meaningful data that can fuel marketing campaigns, boost productivity, and increase overall efficiency. This is where data observability comes into play.

You need a reliable way to monitor, analyze, and optimize data pipelines to gain a competitive advantage and drive innovation. Data observability is a set of practices that helps you understand the health of your data. There are two approaches to it: open-source data observability and vendor-managed data observability.

In this post, we'll discuss both in detail so you know which one to use and when.

What is Data Observability?

Data observability is the practice of continuously monitoring and understanding the health of your data throughout its lifecycle. It covers everything from data sources and pipelines to the transformation processes and the final destination where the data is used.

With data observability, you can see which data is incorrect, where it is breaking, and how to fix it.

The observability market is forecast to grow from $278M in 2022 to $2B by 2026.
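
Taking these figures at face value (in millions of US dollars, over the four years from 2022 to 2026), the implied compound annual growth rate works out as follows:

```latex
\text{CAGR} = \left(\frac{2000}{278}\right)^{1/4} - 1 \approx 7.19^{1/4} - 1 \approx 0.64
```

That is roughly 64% growth per year.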

Why Is Data Observability Crucial for Businesses?

Data observability offers businesses several benefits:

- Data observability plays a major role in improving data quality. Bad data is estimated to cost organizations in the US around $3 trillion annually, a cost no organization wants to bear.

- Practicing data observability lets you catch data issues early, before they lead to decisions based on incorrect data.

- Data observability helps you troubleshoot issues in complex data environments. You can see what went wrong and how it affects your business, and root cause analysis helps you reduce data downtime.

- Data observability also improves cost-effectiveness. By identifying redundant data and misconfigurations, you can make better use of your resources.

What Is Open-Source Data Observability?

Open-source data observability tools provide the features you need to monitor, analyze, and maintain the quality and performance of your data. They can also integrate with other tools and let you define custom data quality rules.

Their extensive developer communities also make them approachable for beginners.
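
To make "custom data quality rules" concrete, here is a minimal, library-free sketch in Python. It is not the API of any particular open-source tool; the names `Rule`, `not_null`, `in_range`, and `validate` are hypothetical, and rows are assumed to be plain dictionaries.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]


@dataclass
class Rule:
    """A named data quality rule: a predicate every row must satisfy."""
    name: str
    check: Callable[[Row], bool]


def not_null(column: str) -> Rule:
    """Hypothetical rule factory: the column must be present and non-null."""
    return Rule(f"{column} is not null", lambda row: row.get(column) is not None)


def in_range(column: str, low: float, high: float) -> Rule:
    """Hypothetical rule factory: the column must be non-null and within [low, high]."""
    return Rule(
        f"{column} between {low} and {high}",
        lambda row: row.get(column) is not None and low <= row[column] <= high,
    )


def validate(rows: list[Row], rules: list[Rule]) -> dict[str, list[int]]:
    """Return, for each failing rule, the indices of the rows that violate it."""
    failures: dict[str, list[int]] = {}
    for rule in rules:
        bad = [i for i, row in enumerate(rows) if not rule.check(row)]
        if bad:
            failures[rule.name] = bad
    return failures


orders = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},
    {"order_id": None, "amount": 99999.0},
]
rules = [not_null("order_id"), in_range("amount", 0, 10_000)]
print(validate(orders, rules))
# {'order_id is not null': [2], 'amount between 0 and 10000': [1, 2]}
```

Dedicated tools add what this sketch leaves out, such as scheduling, result storage, and reporting.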

Here's a list of the common open-source tools used for data observability:

- Great Expectations: Great Expectations is an open-source data validation tool that focuses on data testing, validation, and documentation. It comes with a library of predefined tests (called Expectations) and supports custom tests for checking data on a regular schedule. Thanks to its community-driven development, it is continuously evolving. It supports Python and SQL.

- Soda: Soda supports data quality checks, schema drift detection, and custom tests. Checks are defined in YAML, which makes them easy to write. Data can be validated at multiple points in your CI/CD and data pipelines to prevent downstream data quality issues, so your colleagues get data products they can trust.

- Elementary Data: Elementary Data is an open-source, dbt-native data observability tool that provides visibility into data pipelines and infrastructure. With features like monitoring, anomaly detection, and customizable dashboards, it helps users maintain data quality, reliability, and compliance.

- OpenTelemetry: OpenTelemetry provides a vendor-neutral way to collect, process, and export telemetry data (traces, metrics, and logs). This means you don't need to support and maintain multiple observability data formats, such as those used by Jaeger and Prometheus.

- ELK Stack (Elasticsearch, Logstash, Kibana): This is a combination of open-source tools for centralized logging and log analysis. Elasticsearch is used for indexing and searching log data, Logstash for log ingestion and processing, and Kibana for visualization and analysis.
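
A stack like ELK works best when logs are structured. One common pattern is to emit one JSON object per line, which Logstash can parse (for example with its JSON codec) before indexing in Elasticsearch. The sketch below uses only Python's standard `logging` module; the logger name, the `fields` convention, and the field names are assumptions for illustration, and the shipping configuration (Logstash or another forwarder) is not shown.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line (JSON Lines)."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge pipeline-specific fields passed as extra={"fields": {...}}.
        # The "fields" key is a naming convention assumed for this sketch.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def make_logger(name: str) -> logging.Logger:
    """Create a logger that writes JSON lines to stderr (call once per name)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output via the root logger
    return logger


if __name__ == "__main__":
    log = make_logger("orders_pipeline")  # hypothetical pipeline name
    log.info(
        "load finished",
        extra={"fields": {"table": "orders", "rows_loaded": 1200, "duration_s": 4.2}},
    )
```

Because every line is a self-describing JSON document, fields such as `table` or `rows_loaded` can be filtered and charted in Kibana without custom parsing rules.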

Pros and Cons of Open-Source Data Observability

Let's look at the pros and cons of open-source data observability.

Pros

Cost-effectiveness: Open-source solutions are typically free to use, which can significantly reduce the overall cost of implementing and maintaining data observability capabilities compared to proprietary solutions. You can allocate the savings to other critical areas.

Flexibility and customization: Open-source software allows you to customize and extend the data observability stack according to specific requirements and use cases. You get full access to the source code to tailor the solution to your unique needs.

Community support and collaboration: Open source projects often have active communities of developers, users, and contributors who share knowledge, provide support, and collaborate on improving the software. This community-driven approach fosters innovation, rapid development, and continuous improvement of open-source data observability tools.

Transparency and security: Because the source code is open, you can inspect it for security vulnerabilities, which builds trust and confidence in the security of the data observability solution. Community scrutiny also helps bugs and security flaws get identified and fixed more quickly.

Reduced vendor lock-in: Open-source solutions mitigate the risk of vendor lock-in since you have access to the source code and can switch vendors or platforms more easily. This freedom helps you maintain control over your data observability infrastructure and avoid dependence on a single vendor.

Cons

Open-source data observability also has some limitations:

Complexity and expertise: Implementing and managing open-source data observability solutions may need significant technical expertise and resources. You need skilled personnel who are proficient in deploying, configuring, and maintaining the various components of the observability stack.

Support and documentation: While open-source data observability frameworks often have extensive documentation and community support, organizations may encounter challenges in finding timely and reliable assistance for troubleshooting issues or addressing complex deployment scenarios. Paid support options are available but may incur additional costs.

Integration and compatibility: Integrating multiple open-source tools and components to build a comprehensive data observability solution can be complex and time-consuming. Ensuring compatibility and interoperability between different software versions and dependencies may require careful planning and testing.

Responsibility for maintenance and updates: Organizations are responsible for managing the maintenance, updates, and patches of their open-source data observability infrastructure. You need proactive monitoring and management to keep the software up-to-date with the latest security patches and feature enhancements.

Decube-Managed Data Observability

Decube is a data observability platform designed to integrate seamlessly with an organization's data ecosystem. It monitors and analyzes the performance and health of data across the organization's infrastructure. Unlike open-source data observability tools, Decube is fully managed and secured with industry certifications. It's the only platform that brings together data observability, data contracts, and data catalog features.

Key characteristics of Decube-managed data observability include:

Discoverability (Data Catalog): Decube helps you understand where data is located and what format it is in, with real-time change logging. This makes data systems more accessible and better understood, enabling effective, informed operations.

Domain Ownership (Data Mesh): Decube lets you assign ownership of critical data to the stakeholders who drive business objectives, which in turn promotes accountable data management aligned with strategic business goals.

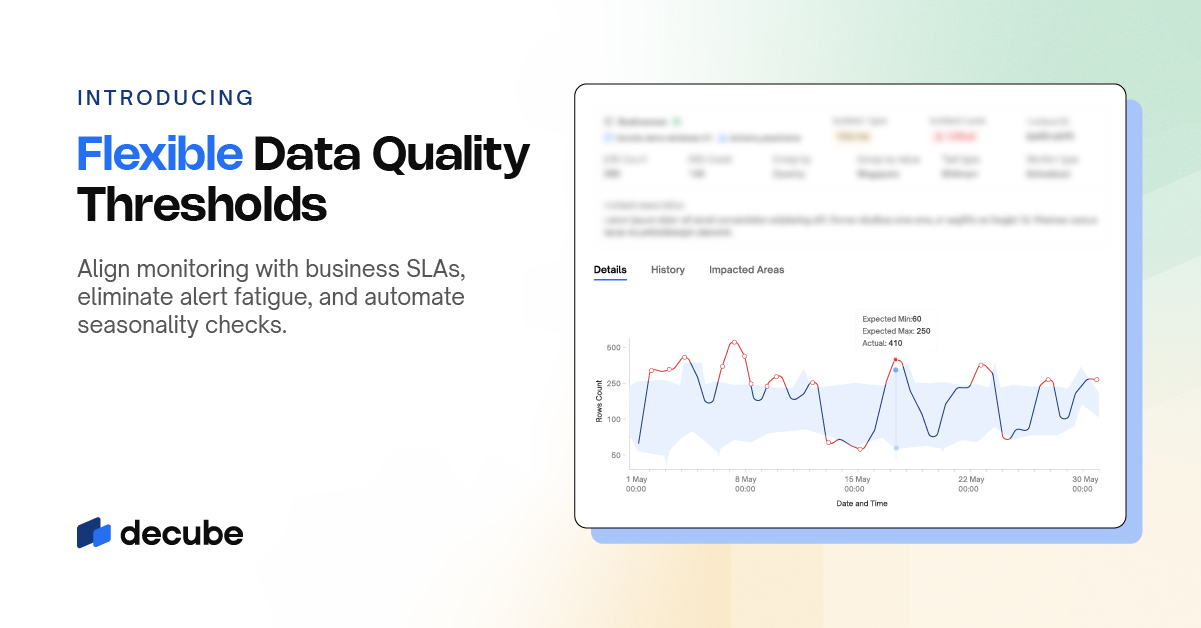

Data Reliability (Data Observability): With Decube, you can implement continuous monitoring across the Five Pillars of Data Observability: freshness, distribution, volume, schema, and lineage. This helps maintain high data quality and protects operations from the risks of low-quality data.
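
To show what monitoring a pillar can mean in practice, here is a minimal standard-library sketch of checks for four of the five pillars. This is not Decube's implementation: the function names, thresholds, and the use of null rate as a stand-in for distribution are assumptions. Lineage is a graph property rather than a per-table check, so it is not covered here.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def check_freshness(last_loaded_at: datetime, max_lag: timedelta, now: datetime) -> bool:
    """Freshness: was the table updated within the allowed lag?"""
    return now - last_loaded_at <= max_lag


def check_volume(todays_rows: int, history: list[int], z_threshold: float = 3.0) -> bool:
    """Volume: is today's row count within z_threshold standard deviations
    of recent history? `history` needs at least two values for stdev."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return todays_rows == mu
    return abs(todays_rows - mu) / sigma <= z_threshold


def check_schema(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Schema: list removed, added, and retyped columns (empty list = no drift)."""
    changes = [f"removed: {col}" for col in expected.keys() - actual.keys()]
    changes += [f"added: {col}" for col in actual.keys() - expected.keys()]
    for col in expected.keys() & actual.keys():
        if expected[col] != actual[col]:
            changes.append(f"type changed: {col} {expected[col]} -> {actual[col]}")
    return sorted(changes)


def check_null_rate(values: list, max_null_rate: float) -> bool:
    """Distribution (one simple proxy): is the share of nulls under the threshold?"""
    if not values:
        return True
    return sum(v is None for v in values) / len(values) <= max_null_rate


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
print(check_freshness(now - timedelta(hours=2), timedelta(hours=6), now))  # True
print(check_volume(480, [1000, 1020, 990, 1010, 1005]))  # False
print(check_schema({"id": "int", "email": "text"},
                   {"id": "bigint", "email": "text", "phone": "text"}))
# ['added: phone', 'type changed: id int -> bigint']
print(check_null_rate([1, None, 3, 4], 0.1))  # False
```

A z-score on row counts is deliberately simple; production tools typically account for seasonality and trends before flagging a volume anomaly.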

Governance: Decube provides role-based access control to support regulatory compliance and protect sensitive information. It also secures critical data, including by masking PII and other critical data elements, so that data is used ethically and legally across the organization.
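
The combination of role-based access and PII masking can be illustrated with a small standard-library sketch. This is a hypothetical illustration of the general idea, not how Decube configures masking: the roles, column names, and masking formats are all assumptions, and unknown roles fall back to seeing everything masked (default deny).

```python
import re


def mask_email(email: str) -> str:
    """Keep the first character and the domain: alice@example.com -> a***@example.com."""
    match = re.match(r"^([^@])[^@]*(@.+)$", email)
    return f"{match.group(1)}***{match.group(2)}" if match else "***"


def mask_phone(phone: str) -> str:
    """Keep only the last four digits: +1 (555) 123-4567 -> ***-4567."""
    digits = re.sub(r"\D", "", phone)
    return f"***-{digits[-4:]}" if len(digits) >= 4 else "***"


# Hypothetical PII columns and the masking function applied to each.
MASKERS = {"email": mask_email, "phone": mask_phone}

# Hypothetical role policy: which PII columns each role may see unmasked.
UNMASKED_FOR_ROLE = {
    "admin": {"email", "phone"},
    "support": {"email"},
    "analyst": set(),
}


def apply_policy(row: dict, role: str) -> dict:
    """Return a copy of `row` with PII masked according to `role`.

    Roles missing from the policy get no unmasked columns (default deny).
    """
    allowed = UNMASKED_FOR_ROLE.get(role, set())
    masked = {}
    for column, value in row.items():
        if column in MASKERS and column not in allowed and value is not None:
            masked[column] = MASKERS[column](value)
        else:
            masked[column] = value
    return masked


row = {"id": 7, "email": "alice@example.com", "phone": "+1 (555) 123-4567"}
print(apply_policy(row, "analyst"))
# {'id': 7, 'email': 'a***@example.com', 'phone': '***-4567'}
```

Defaulting unknown roles to full masking means a missing policy entry fails safe rather than leaking data.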

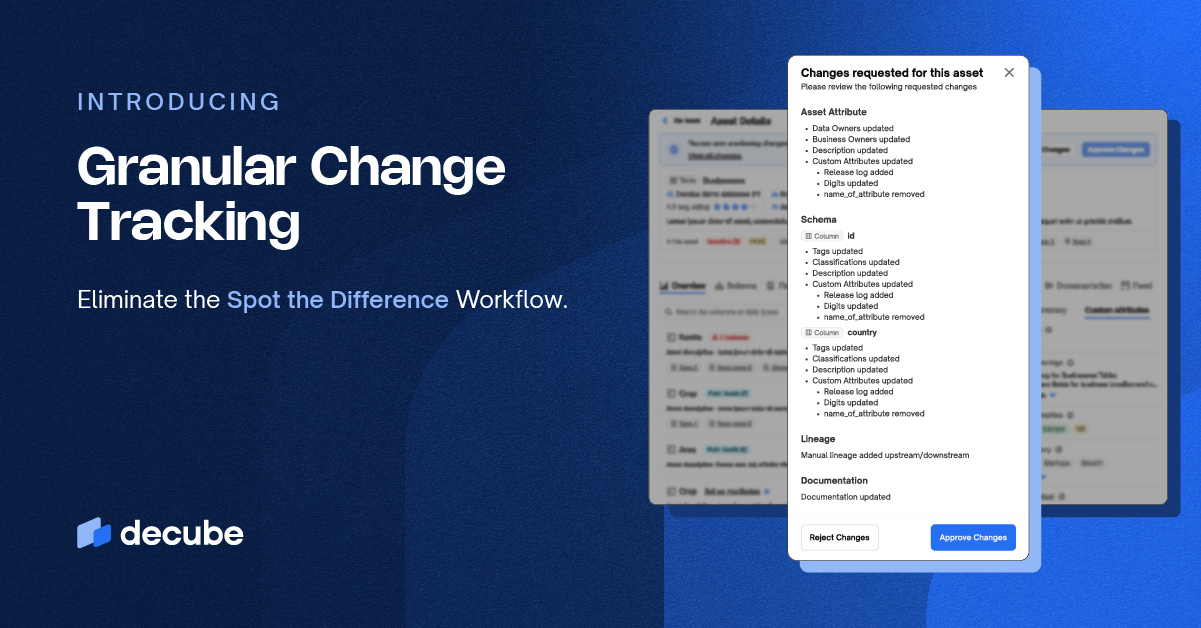

Data Catalog (Enhanced with Column Lineage and Approval Flow): Decube documents all data assets with enhanced column lineage and an embedded approval flow for accurate verification and cataloging. It further improves traceability of data transformations and approvals, ensuring all stakeholders access reliable and approved data insights.
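
Column-level lineage is essentially a dependency graph between columns. Walking it upstream supports root cause analysis (what could have broken this column?), and walking it downstream supports impact analysis (what breaks if this column changes?). The sketch below is a hypothetical, standard-library illustration rather than Decube's data model; the `table.column` naming and the example lineage are made up.

```python
from collections import deque

# Hypothetical column-level lineage: each key is a column, each value the
# set of columns it is directly derived from.
LINEAGE: dict[str, set[str]] = {
    "staging.orders.amount_usd": {"raw.orders.amount", "raw.fx_rates.rate"},
    "staging.orders.order_id": {"raw.orders.id"},
    "mart.revenue.daily_total": {"staging.orders.amount_usd"},
    "mart.revenue.avg_order": {"staging.orders.amount_usd", "staging.orders.order_id"},
}


def upstream(column: str, lineage: dict[str, set[str]]) -> set[str]:
    """All columns `column` depends on, directly or transitively (breadth-first)."""
    seen: set[str] = set()
    queue = deque(lineage.get(column, ()))
    while queue:
        col = queue.popleft()
        if col not in seen:  # `seen` also protects against cycles
            seen.add(col)
            queue.extend(lineage.get(col, ()))
    return seen


def downstream(column: str, lineage: dict[str, set[str]]) -> set[str]:
    """All columns affected if `column` changes: invert the edges, then reuse BFS."""
    inverted: dict[str, set[str]] = {}
    for child, parents in lineage.items():
        for parent in parents:
            inverted.setdefault(parent, set()).add(child)
    return upstream(column, inverted)


print(sorted(downstream("raw.orders.amount", LINEAGE)))
# ['mart.revenue.avg_order', 'mart.revenue.daily_total', 'staging.orders.amount_usd']
```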

Comprehensive platform: Decube offers an integrated platform that covers a range of data observability capabilities, including monitoring, logging, tracing, alerting, and analytics, with a centralized interface for data-related metrics, events, and logs.

Managed services: Decube manages the underlying infrastructure, software deployment, configuration, maintenance, and updates, relieving you of the burden of managing these tasks internally. Managed services ensure that the data observability platform remains up-to-date and secure without requiring significant internal resources.

Security and Compliance: Decube is ISO 27001 and SOC 2 certified and focuses on protecting the organization's data assets and ensuring regulatory compliance.

Limitations of Decube-Managed Data Observability

A managed solution like Decube comes at a cost, and it is not the cheaper option. If your company deals with relatively few tables (in the hundreds), we recommend manual checks or open-source tools.

Some large companies, like Netflix and Airbnb, with extraordinarily large data sizes and custom tooling, may opt for a home-grown solution that meets their requirements.

Companies with a substantial amount of data should consider a managed solution, since the value it delivers justifies the cost.

The savings show up in the time the data engineering team saves and in the productivity gained from fewer errors in the data pipeline.

Final Thoughts

Choosing between open-source and vendor-managed data observability depends on your business needs and requirements. While open-source tools are mostly free to use, vendor-managed data observability lets you draw on a third party's expertise to make the most of your data.

By adopting open-source data observability tools and practices, you can enhance your data management processes, improve decision-making, and foster a culture of accountability and trust.

However, be aware of the complexities involved, including privacy concerns, data security issues, and the need for robust governance frameworks.

If you'd like expert help, get in touch with Decube.
