
Data Lake vs Data Warehouse: Key Differences for Your Needs

Understand the differences between data lakes and data warehouses, from storage and processing to structure and use cases.


Data has become a critical asset for businesses of all sizes and industries. According to recent estimates, we create 328.77 million terabytes of data every day, a number so large that it is difficult to comprehend. This exponential growth makes it increasingly hard for organizations to manage and analyze their data effectively. As one analogy puts it: "Storing data is like buying clothes. You want to have enough options to choose from, but having too much can make it difficult to find what you need and may even be overwhelming."

The ability to manage data effectively is crucial for businesses that want to uncover insights and make informed decisions. As McKinsey & Company notes, companies that leverage data effectively can increase their operating margins by up to 60%. Achieving this, however, requires the right infrastructure and tools to manage and analyze data. That's where data warehouses and data lakes come in. Both offer powerful ways to store and analyze data, but they differ significantly in how they structure, store, and process it.

In this blog, we explore the differences between data warehouses and data lakes and help you determine which one is right for your business.

Jump to:

- Structured vs. Unstructured Data

- Key Differences Between Data Warehouses and Data Lakes

What is a Data Warehouse?

A data warehouse is a centralized repository in which an organization stores data from many sources in a structured format, such as tables, according to a specific schema or blueprint. This structure allows data to be easily queried and analyzed for business intelligence and decision-making.

Data warehouses are designed to handle large amounts of structured data, such as sales figures, customer information, and financial data. They typically require a defined schema, a blueprint specifying how the data is organized and how tables relate to one another. The schema helps ensure data quality and consistency across the organization, making it easier for analysts and decision-makers to work with the data.
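To make the idea of a schema concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The star-schema layout (a `fact_sales` table referencing `dim_customer` and `dim_product`) and all table and column names are hypothetical. It shows the schema being enforced when data is written: a sale that references a nonexistent customer is rejected.

```python
import sqlite3

# In-memory SQLite database standing in for a warehouse (illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce the declared relationships

# Hypothetical star schema: one fact table referencing two dimension tables.
conn.executescript("""
CREATE TABLE dim_customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    region      TEXT NOT NULL
);
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    category   TEXT NOT NULL
);
CREATE TABLE fact_sales (
    sale_id     INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    product_id  INTEGER NOT NULL REFERENCES dim_product(product_id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0),
    amount      REAL    NOT NULL
);
""")

conn.execute("INSERT INTO dim_customer VALUES (1, 'Acme Corp', 'EMEA')")
conn.execute("INSERT INTO dim_product VALUES (10, 'Widget', 'Hardware')")
conn.execute("INSERT INTO fact_sales VALUES (100, 1, 10, 3, 29.97)")

# A row that violates the schema (customer 99 does not exist) is rejected on write.
try:
    conn.execute("INSERT INTO fact_sales VALUES (101, 99, 10, 1, 9.99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Because relationships are declared, joining facts to dimensions is straightforward.
rows = conn.execute("""
    SELECT c.region, p.category, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_customer c ON c.customer_id = f.customer_id
    JOIN dim_product  p ON p.product_id  = f.product_id
    GROUP BY c.region, p.category
""").fetchall()
print(rejected, rows)
```

Production warehouses run on dedicated engines, but the principle is the same: structure is declared up front and data is validated against it when it is written.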

Data warehouses often use ETL (extract, transform, load) to get data from various sources into the warehouse. ETL involves:

- Extracting data from the source systems.

- Transforming it into the required format.

- Loading it into the data warehouse.

Once the data is loaded, it can be easily queried and analyzed using tools such as SQL and business intelligence software.
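The three ETL steps can be sketched in plain Python, again with SQLite standing in for the warehouse. The CSV content, column names, and cleaning rules are illustrative assumptions; the point is that transformation happens before loading, so only cleaned, typed rows reach the warehouse table.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: a CSV export from an operational system.
SOURCE_CSV = """order_id,customer,amount,order_date
1, alice ,19.99,2024-01-05
2,BOB,5.00,2024-01-06
3,carol,not_a_number,2024-01-07
"""

def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: clean values and cast types; drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline might route this row to a rejects table
        clean.append((int(row["order_id"]), row["customer"].strip().title(),
                      amount, row["order_date"]))
    return clean

def load(conn, rows):
    """Load: insert the already-transformed rows into the warehouse table."""
    conn.execute("""CREATE TABLE IF NOT EXISTS orders (
        order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)""")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))

# Once loaded, the data can be queried with plain SQL.
result = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(result)
```

The row with an unparseable amount never reaches the `orders` table, which is how ETL keeps the warehouse consistent with its schema.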

Think of a data warehouse as a giant filing cabinet for data, where each piece of information is organized and labeled to make it easy to find and use.

What is a Data Lake?

A data lake is a large, centralized repository where an organization stores its data in raw form, whether structured, semi-structured, or unstructured, without requiring any predefined schema or organization.

Data lakes are designed to handle massive amounts of raw data, such as social media posts, website clickstream data, and machine-generated log files. Unlike data warehouses, data lakes do not require a predefined schema, so data can be stored in any format; a structure is applied only when the data is read (an approach known as schema-on-read).

Data lakes are often used for exploratory or big data analytics, where data scientists and analysts can explore and experiment with the data to identify patterns, trends, and insights. Data lakes allow organizations to discover new opportunities, such as product recommendations, customer behavior patterns, and market trends, that a purely structured approach might miss.

Data lakes typically use ELT (extract, load, transform) instead to get data from various sources into the lake. The key difference is the order of the steps: data is loaded in its raw form, and transformation happens later, when the data is needed. Once the data is loaded, it can be transformed and analyzed using tools such as Apache Spark and Hadoop.
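By contrast with ETL, a minimal ELT sketch loads raw events into the lake unchanged and applies a schema only when the data is read. Here a temporary directory of JSON Lines files stands in for the lake's object storage, and the event fields and the `load_raw` and `page_views` helpers are hypothetical; at scale, this read-time transformation is the kind of work a tool like Apache Spark would do.

```python
import json
import tempfile
from pathlib import Path

def load_raw(zone, events):
    """Extract + Load: write events exactly as received, with no schema applied."""
    zone.mkdir(parents=True, exist_ok=True)
    with open(zone / "events-0001.jsonl", "w", encoding="utf-8") as f:
        for event in events:
            f.write(json.dumps(event) + "\n")

def page_views(zone):
    """Transform at read time: apply only the structure this analysis needs."""
    for path in sorted(zone.glob("*.jsonl")):
        with open(path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                if "page" in record:  # keep page-view events; ignore other shapes
                    yield record["user"], record["page"]

# Hypothetical clickstream events; note that they do not all share the same fields.
events = [
    {"user": "u1", "page": "/home", "ts": "2024-01-05T10:00:00"},
    {"user": "u2", "page": "/pricing", "ts": "2024-01-05T10:01:00", "ref": "ad"},
    {"user": "u1", "action": "click", "target": "signup-button"},
]

# A temporary directory stands in for the lake's object storage.
with tempfile.TemporaryDirectory() as tmp:
    raw_zone = Path(tmp) / "raw" / "clickstream"
    load_raw(raw_zone, events)
    views = list(page_views(raw_zone))

print(views)
```

Nothing was discarded at load time: a later analysis could read the same raw files and extract the click events instead.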

Think of a data lake as a big pool of unfiltered data where everything is dumped in one place without any prior organization.

Why Data Management?

Data management is crucial for organizations because it allows them to effectively use and analyze their data to gain insights, make informed decisions, and stay ahead of the competition. Let's take a look at some real-life examples that illustrate the importance of data management:

- Customer Relationship Management (CRM): A company's customer database is one of its most valuable assets. Effective data management practices help maintain accurate and up-to-date customer records, allowing organizations to better understand customers' needs, preferences, and behavior. This data can be used to tailor marketing campaigns, improve customer service, and ultimately drive sales.

- Fraud Detection: Fraud is a major issue for many industries, including banking, insurance, and healthcare. Effective data management practices can help organizations detect and prevent fraudulent activity by analyzing large amounts of data to identify patterns and anomalies. For example, a credit card company may use data management to analyze customer transactions for unusual spending patterns or transactions made in locations far from the customer's usual location.

- Healthcare Analytics: Healthcare organizations collect vast amounts of data, including patient records, medical imaging, and clinical trial data. Effective data management practices can help these organizations use this data to improve patient outcomes, develop new treatments, and advance medical research.
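The fraud-detection example can be illustrated with a very simple statistical rule: flag a transaction whose amount lies more than a chosen number of standard deviations from the customer's historical mean (a z-score test). This is a deliberately simplified sketch; the threshold of 3, the `flag_unusual` helper, and the sample amounts are assumptions, and real fraud systems combine many more signals, such as location and merchant.

```python
from statistics import mean, stdev

def flag_unusual(history, new_amounts, threshold=3.0):
    """Return the amounts in new_amounts whose z-score against history exceeds threshold.

    history must contain at least two past amounts (stdev needs two points).
    """
    mu = mean(history)
    sigma = stdev(history)
    flagged = []
    for amount in new_amounts:
        if sigma == 0:
            # History has no variation, so any different amount stands out.
            is_unusual = amount != mu
        else:
            is_unusual = abs(amount - mu) / sigma > threshold
        if is_unusual:
            flagged.append(amount)
    return flagged

# Hypothetical past transaction amounts for one customer.
history = [42.0, 38.5, 55.0, 47.25, 40.0, 51.0, 44.0]
print(flag_unusual(history, [49.0, 980.0, 35.0]))
```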

In short, data management is about making sense of the data an organization generates and stores, and ultimately using it to drive innovation.

Structured vs. Unstructured Data

Before deciding whether a data warehouse or a data lake better suits your organization's needs, it is essential to understand the element both of them are built around: data.

- Structured data is highly organized and easily searchable, while unstructured data is typically more complex and harder to search and analyze. Structured data follows a predefined format or schema, such as the rows and columns of a relational database table. In contrast, unstructured data, such as images, videos, and text documents, has no predefined design or structure.

- Structured data is typically easier to analyze because it can be searched, sorted, filtered, and aggregated using traditional SQL-based tools. Structured data can also be easily integrated with other systems and applications, making it ideal for business intelligence and reporting.

- Unstructured data, on the other hand, is typically harder to analyze because it does not have a predefined structure or format. Unstructured data can include a wide range of data types, such as audio, video, text, and images, and may require specialized tools and techniques to analyze. This is because unstructured data is often generated from sources such as social media, sensors, or other machine-generated sources, where the data is not structured or organized in any particular way.

However, despite its complexity, unstructured data can provide valuable insights that structured data cannot. For example, sentiment analysis of social media data can help organizations understand how customers feel about their products or services. Image recognition technology can identify objects and patterns in images and videos, allowing organizations to gain insights that would be difficult or impossible to obtain from structured data alone.
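As a toy illustration of turning unstructured text into structured insight, the sketch below scores posts against a tiny hand-written word list. The lexicon, the `sentiment` helper, and the posts are invented for illustration; practical sentiment analysis relies on trained models or much larger curated lexicons. The output shape, a label and a score per post, shows how free text can become a structured field you could store and query in a warehouse.

```python
import re

# Tiny hypothetical lexicon; real systems use trained models or far larger lexicons.
POSITIVE = {"love", "great", "fast", "helpful", "easy"}
NEGATIVE = {"hate", "slow", "broken", "confusing", "bad"}

def sentiment(text):
    """Return (label, score) where score = #positive words - #negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return label, score

# Hypothetical social media posts.
posts = [
    "Love the new dashboard, setup was easy!",
    "App is slow and the export feature is broken.",
    "Ordered a replacement part today.",
]
for post in posts:
    print(sentiment(post), post)
```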

Key Differences Between Data Warehouses and Data Lakes

With that foundation in place, it is easier to compare data warehouses and data lakes, from their structure to their use cases.

- Data Structure: The most significant difference between data warehouses and data lakes is how they structure data. A data warehouse is structured, meaning the data is organized into tables, columns, and rows with a defined schema. In contrast, a data lake stores data in its raw form, whether structured, semi-structured, or unstructured, without a predefined schema or organization.

- Data Storage: Data warehouses are optimized for storing structured data that has been cleaned, transformed, and organized for analysis. They typically store data in a compressed, optimized format (often columnar), which makes it faster to query and analyze. Data lakes, on the other hand, store all data in its raw form, regardless of its structure or format.

- Data Processing: Data warehouses are designed for efficient, fast query processing and typically support structured SQL queries. They are optimized for reporting and analysis, often with pre-aggregated data that can be easily queried for insights. Data lakes, on the other hand, are commonly used for batch processing of large amounts of raw data. They can handle a wide variety of data types and formats and are often used for exploratory or big data analytics, where data scientists and analysts can explore and experiment with the data to identify patterns, trends, and insights.
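One reason warehouses can store data compactly and query it quickly is that many of them use column-oriented storage. The sketch below is a simplified illustration rather than any product's actual storage format: it pivots hypothetical rows into columns, compresses a repetitive column with run-length encoding, and computes an aggregate by reading a single column.

```python
from itertools import groupby

# Row-oriented layout: each record is stored together (hypothetical sales data).
rows = [
    {"region": "EMEA", "product": "Widget", "amount": 10.0},
    {"region": "EMEA", "product": "Gadget", "amount": 25.0},
    {"region": "EMEA", "product": "Widget", "amount": 12.5},
    {"region": "APAC", "product": "Widget", "amount": 8.0},
    {"region": "APAC", "product": "Gadget", "amount": 30.0},
]

# Column-oriented layout: each column is stored contiguously.
columns = {name: [r[name] for r in rows] for name in rows[0]}

def run_length_encode(values):
    """Compress consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]

# Sorted, low-cardinality columns compress well: five values become two pairs.
encoded_region = run_length_encode(columns["region"])

# An aggregate only needs to read the one column it uses.
total_amount = sum(columns["amount"])
print(encoded_region, total_amount)
```

In a row layout, summing `amount` would mean touching every field of every record; in a column layout, the query reads only the values it needs.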

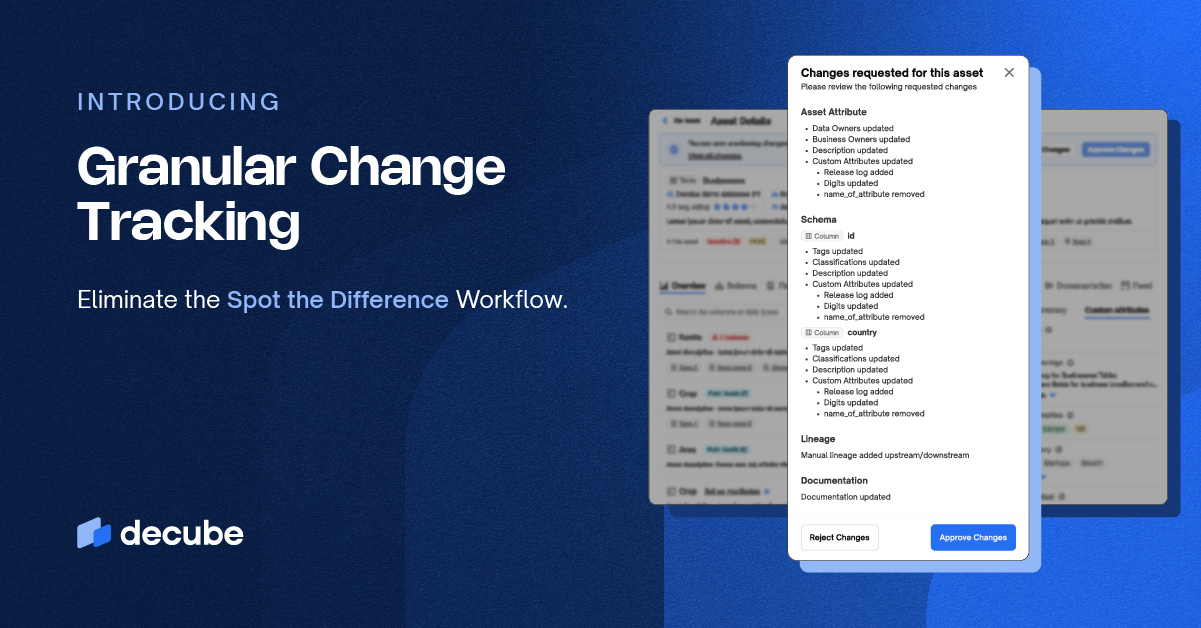

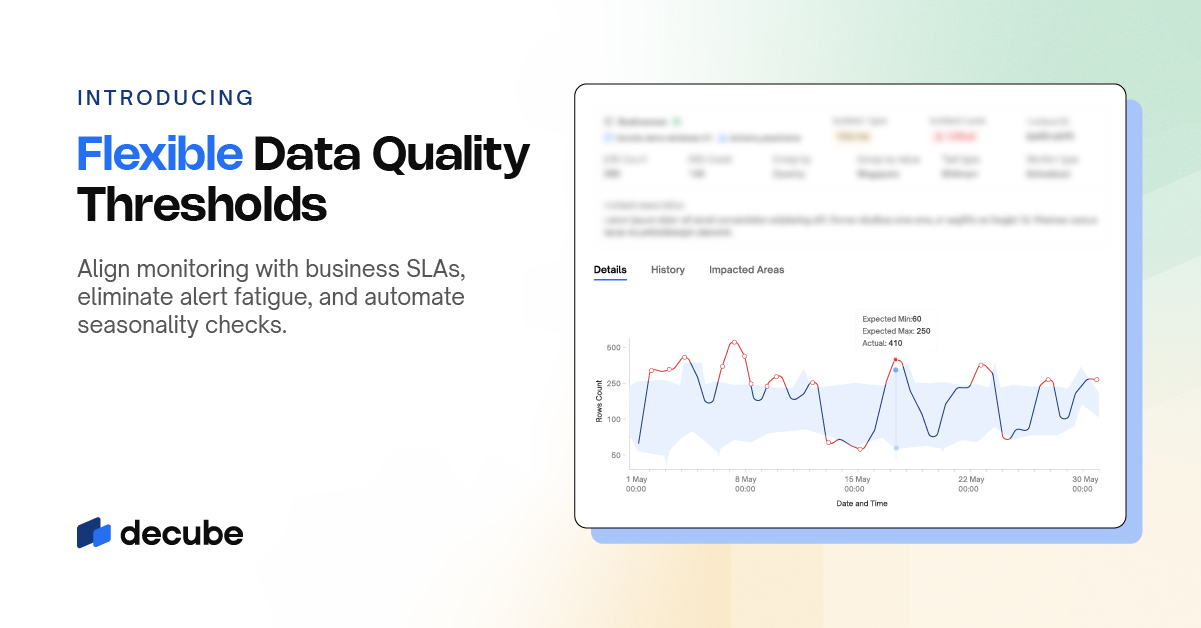

- Data Governance: Data warehouses typically enforce strict data governance policies, including data quality checks, data lineage tracking, and access controls, to ensure that the data is accurate, consistent, and secure. Data lakes, on the other hand, are more flexible and usually have less governance by default. They are often used for experimental and exploratory data analysis, where the data is not yet fully understood and data quality may be lower.

- Use Cases: Data warehouses are typically used for structured data analysis, such as business intelligence reporting, financial analysis, and performance monitoring. They are well-suited for answering predefined questions with structured data. Data lakes, on the other hand, are used for big data analytics and exploratory analysis. They are well-suited for discovering new insights and opportunities, such as customer behavior patterns, product recommendations, and market trends, that may not have been previously found with structured data.
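Governance rules such as data quality checks are often expressed as simple, testable predicates that run before data is published to the warehouse. The rules, field names, and `run_quality_checks` helper below are hypothetical; the sketch shows the pattern of recording which rows fail which check, so that bad data can be quarantined or fixed rather than silently loaded.

```python
from dataclasses import dataclass

@dataclass
class CheckResult:
    name: str
    passed: bool
    failing_rows: list

def run_quality_checks(rows):
    """Apply each hypothetical rule to every row and report failing row indexes."""
    checks = {
        "customer_id is present": lambda r: r.get("customer_id") is not None,
        "amount is non-negative": lambda r: (
            isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
        "currency is a 3-letter uppercase code": lambda r: (
            isinstance(r.get("currency"), str)
            and len(r["currency"]) == 3 and r["currency"].isupper()),
    }
    results = []
    for name, rule in checks.items():
        failing = [i for i, row in enumerate(rows) if not rule(row)]
        results.append(CheckResult(name, not failing, failing))
    return results

# Hypothetical incoming batch with one problem per later row.
batch = [
    {"customer_id": 1, "amount": 19.99, "currency": "USD"},
    {"customer_id": None, "amount": 5.00, "currency": "EUR"},
    {"customer_id": 3, "amount": -2.50, "currency": "usd"},
]
for result in run_quality_checks(batch):
    print(result)
```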

Which One is Right for You?

When it comes to choosing between a data warehouse and a data lake, the decision ultimately depends on your specific business needs and data requirements. Here are some points to consider when deciding which one is right for you:

1. Data Structure and Variety: A data warehouse may be the best choice if your data is highly structured, with a well-defined schema and clearly defined data types. Data warehouses are optimized for storing structured data and are designed to support efficient and fast query processing for structured data analysis.

If your data is highly unstructured, with a wide variety of data types, including images, videos, and text documents, a data lake may be the best choice. Data lakes are designed to store and process large amounts of unstructured and semi-structured data, making them ideal for big data analytics and exploratory analysis.

2. Data Processing and Analysis Requirements: If you require fast, efficient processing of large amounts of structured data, a data warehouse may be your best choice. Data warehouses are optimized for query performance on structured data.

If you need to perform exploratory analysis and discover new insights from unstructured or semi-structured data, a data lake may be your best choice. Data lakes provide a flexible and scalable platform for data scientists and analysts to explore and experiment with data, allowing them to discover new patterns and insights.

3. Data Governance and Scalability: If you require strict data governance policies to ensure the accuracy, consistency, and security of your data, a data warehouse may be the best choice for you. Data warehouses have well-established governance policies, including data quality checks, lineage tracking, and access controls, to ensure that data is secure and accurate.

If you need to quickly add new data sources or scale your storage and processing capabilities as your business grows, a data lake may be your best choice. Data lakes are designed to be highly scalable, allowing you to add new data sources and scale up or down as needed.

4. Data Volume: Another key consideration is the volume of data you need to store and process. A data warehouse may be sufficient for your needs if you're dealing with a relatively small amount of data.

However, if you are dealing with large volumes of data, a data lake may be a better choice because it is designed to scale to very large data volumes.

5. Skillsets: The skillsets of your data team are another consideration when choosing between a data warehouse and a data lake. If your team has more experience with structured data analysis and SQL, a data warehouse may be the better choice.

However, a data lake may be the better option if your team has more experience with big data technologies and unstructured data analysis.

Ultimately, the right choice will depend on your data management requirements and the insights you need to derive from your data.

The Future of Data Management: The Hybrid Approach

It is important to note that the choice between a data warehouse and a data lake is not necessarily a binary one. Many see the future of data management in a hybrid approach, sometimes called a data lakehouse, that combines the strengths of both. This approach allows organizations to leverage the best of both worlds, storing and analyzing both structured and unstructured data in a unified and integrated manner.

By using a hybrid approach, organizations can take advantage of the scalability, cost-effectiveness, and flexibility of data lakes while leveraging the governance, security, and reliability of data warehouses. This enables organizations to derive more value from their data and make better-informed decisions.

In addition to the hybrid approach, the future of data management will be shaped by advances in artificial intelligence (AI), machine learning (ML), and cloud computing. These technologies will help organizations extract even greater insights and value from their data, stay ahead of the competition, and drive innovation.

We need to wait and see what technology offers us next!
