
Automated Data Lineage: Key Benefits & Tools Evaluation Guide for 2024

Discover how automated data lineage enhances data quality, compliance, and governance. This guide covers the top tools for 2024, helping you choose the right solution to streamline data management and improve decision-making.

In an AI-driven world, knowing where data comes from is crucial. Automated data lineage is a game-changer, making it easier for companies to manage their data. Jane, a data analyst, found it greatly improved her team's work and opened up new possibilities.

Jane loved working with data but hated the old way of tracking its path. Then, an automated data lineage tool changed everything. It made all data connections and changes clear, helping Jane and her team make better choices.

This article explores the benefits of automated data lineage. It gives you the tools and knowledge to use it in your company. Whether you're experienced or new to data work, this guide will help you understand and use data management better.

Key Takeaways

- Automated data lineage improves data quality and accuracy by showing where data comes from.

- It helps meet compliance and reporting needs by making data traceable and transparent.

- It makes data management easier with a single place for data cataloging and metadata.

- It speeds up finding and fixing problems, helping teams make quicker decisions.

- It encourages teamwork by sharing knowledge of data origins and changes.

Understanding Automated Data Lineage

In today's digital world, companies are creating and storing huge amounts of data. Managing this data well is key for making smart decisions, following rules, and running smoothly. Automated data lineage plays a big role here.

Automated data lineage tracks and documents data as it moves through an organization. It shows how data changes and is used in different places. This helps businesses understand their data governance, data mapping, and metadata management better.

At the core of automated data lineage is metadata management. Metadata is "data about data," like where it comes from and how it's used. Tools that manage metadata help show how data moves and connects.

Data provenance, the record of where data originated, is also important. It helps keep data trustworthy and accurate, and it helps teams find problems quickly.
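To make these ideas concrete, here is a minimal Python sketch of lineage as a graph: datasets are nodes, transformations are edges, and metadata is attached to each dataset. The class name `LineageGraph`, its methods, and the dataset names are all hypothetical and chosen for illustration; real lineage tools store far richer information.

```python
from dataclasses import dataclass, field


@dataclass
class LineageGraph:
    """Toy lineage store: datasets are nodes, transformations are edges."""

    # Maps each dataset to the (source, transformation) pairs that produced it.
    parents: dict = field(default_factory=dict)
    # Metadata ("data about data") per dataset, e.g. owner or source system.
    metadata: dict = field(default_factory=dict)

    def register(self, name, **meta):
        """Add a dataset (if new) and merge any metadata supplied for it."""
        self.metadata.setdefault(name, {}).update(meta)
        self.parents.setdefault(name, [])

    def record(self, source, target, transformation):
        """Record that `target` was derived from `source` by `transformation`."""
        self.register(source)
        self.register(target)
        self.parents[target].append((source, transformation))

    def provenance(self, name):
        """Return the original sources (datasets with no parents) feeding `name`."""
        seen, stack, roots = set(), [name], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            sources = self.parents.get(current, [])
            if not sources:
                roots.add(current)
            for source, _ in sources:
                stack.append(source)
        return sorted(roots)


# Hypothetical datasets, used only to show the idea.
graph = LineageGraph()
graph.register("crm.customers", owner="sales-ops")
graph.record("crm.customers", "staging.customers", "deduplicate")
graph.record("billing.invoices", "staging.invoices", "normalize currency")
graph.record("staging.customers", "reports.revenue", "join")
graph.record("staging.invoices", "reports.revenue", "join")
print(graph.provenance("reports.revenue"))  # ['billing.invoices', 'crm.customers']
```

Walking the graph backwards from a report to its root datasets is the provenance question in its simplest form: which original sources does this number depend on?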

Automated data lineage helps companies understand their data better. It improves how they manage data and make data-driven decisions. This technology helps businesses use their data more effectively.

https://youtube.com/watch?v=8wGUnXhISz0

Key Benefits of Automated Data Lineage

Automated data lineage brings many benefits to organizations. It improves data quality, accuracy, and compliance. It also makes data governance easier. Let's look at the main advantages of using automated data lineage.

Enhanced Data Quality and Accuracy

Automated data lineage shows where data comes from and how it changes. This helps find and fix data quality problems. It makes sure the data is accurate and reliable.

Improved Compliance and Regulatory Reporting

Data regulations are stricter than ever. Automated data lineage helps meet them by keeping a detailed record of data activities, which makes it easier to produce reports and demonstrate sound data practices.

Streamlined Data Governance

Automated data lineage gives a clear view of data and who owns it. This helps data stewards make better decisions. It ensures everyone is accountable for data.

Accelerated Troubleshooting and Impact Analysis

Automated data lineage speeds up finding and fixing problems. It shows how data is connected, making it easy to find the source of issues. This reduces downtime and keeps business running smoothly.
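As a rough illustration of how this works, impact analysis and root-cause tracing can both be expressed as graph traversals over lineage edges: downstream for impact, upstream for root cause. The sketch below assumes a hypothetical edge list and hypothetical function names; it is not how any particular tool implements the feature.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (upstream dataset, downstream dataset).
EDGES = [
    ("raw.orders", "staging.orders"),
    ("raw.customers", "staging.customers"),
    ("staging.orders", "marts.sales"),
    ("staging.customers", "marts.sales"),
    ("marts.sales", "dashboards.revenue"),
]


def build_adjacency(edges, reverse=False):
    """Index edges by upstream node, or by downstream node when reverse=True."""
    adjacency = defaultdict(list)
    for upstream, downstream in edges:
        if reverse:
            adjacency[downstream].append(upstream)
        else:
            adjacency[upstream].append(downstream)
    return adjacency


def reachable(start, adjacency):
    """Breadth-first search: every node reachable from `start`, excluding `start`."""
    visited, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency.get(node, []):
            if neighbour not in visited and neighbour != start:
                visited.add(neighbour)
                queue.append(neighbour)
    return sorted(visited)


def impact_of(dataset, edges=EDGES):
    """Downstream assets that could be affected if `dataset` changes or breaks."""
    return reachable(dataset, build_adjacency(edges))


def root_cause_candidates(dataset, edges=EDGES):
    """Upstream assets worth checking when `dataset` looks wrong."""
    return reachable(dataset, build_adjacency(edges, reverse=True))


print(impact_of("staging.orders"))
# ['dashboards.revenue', 'marts.sales']
print(root_cause_candidates("marts.sales"))
# ['raw.customers', 'raw.orders', 'staging.customers', 'staging.orders']
```

The same edge list answers both questions; only the direction of traversal changes.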

Enhanced Collaboration Across Teams

Automated data lineage helps teams work better together. Everyone understands the data and its connections. This makes teamwork more effective and gives teams more confidence in their decisions.

Scalability and Efficiency

As data grows, automated data lineage keeps up. It saves time and resources. This lets businesses focus on insights and strategy.

Evaluating Automated Data Lineage Tools: A Guide

Choosing the right automated data lineage tools is key for better data quality and compliance. It's important to look at various features and criteria. This ensures the tool fits your specific needs.

Key Features to Consider

- Data mapping: Check if the tool can automatically map data flows and dependencies in your data ecosystem.

- Metadata management: See how well the tool catalogs, organizes, and manages metadata. This is crucial for keeping data context and lineage.

- Data cataloging: Look for tools with strong data cataloging features. They should help you discover, understand, and manage your data assets easily.

- Scalability: Make sure the tool can handle your data's volume, velocity, and variety. It should support your future data management needs.

Criteria for Tool Selection

When looking at automated data lineage tools, consider these key criteria:

- Functionality: Check if the tool offers full data lineage. This includes end-to-end visibility, impact analysis, and root-cause analysis.

- Integration: See if the tool integrates well with your current data infrastructure. This includes data sources, warehouses, and other systems.

- User experience: Choose tools with an easy-to-use interface. This lets your team quickly use the data lineage features.

- Scalability and performance: Make sure the tool can grow with your data. It should provide reliable and efficient data lineage tracing.

- Vendor support and ecosystem: Look at the vendor's reputation, customer feedback, and support resources. This helps ensure you get the help you need.
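One simple way to apply the criteria above is a weighted scorecard. The following Python sketch is only an illustration: the weights, the 1-5 rating scale, and the placeholder names `tool_a` and `tool_b` are assumptions, not measurements of any real product. Adjust the weights to reflect your own priorities.

```python
# Hypothetical weights (summing to 1.0) for the five criteria listed above.
WEIGHTS = {
    "functionality": 0.30,
    "integration": 0.25,
    "user_experience": 0.15,
    "scalability": 0.20,
    "vendor_support": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine per-criterion ratings (1-5) into a single weighted score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(weights[c] * ratings[c] for c in weights), 2)


def rank_tools(candidates, weights=WEIGHTS):
    """Return (name, score) pairs sorted from highest to lowest score."""
    scored = [(name, weighted_score(r, weights)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up ratings for two placeholder tools, for illustration only.
candidates = {
    "tool_a": {"functionality": 4, "integration": 3, "user_experience": 5,
               "scalability": 4, "vendor_support": 3},
    "tool_b": {"functionality": 5, "integration": 4, "user_experience": 3,
               "scalability": 3, "vendor_support": 4},
}

for name, score in rank_tools(candidates):
    print(f"{name}: {score}")
```

Writing the weights down before scoring any vendor helps keep the comparison consistent across evaluators.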

By carefully evaluating automated data lineage tools, you can make the best choice. This choice will help improve your data management.

Top Automated Data Lineage Tools to Consider

Organizations today need to understand their data better. This is why data lineage tools are so important. We'll look at some top tools that can help manage your data better.

1. Atlan

Atlan is a comprehensive data governance platform known for its strong data lineage capabilities. It provides a holistic view of data flows while leveraging AI to deliver meaningful insights. Atlan’s collaborative interface allows both technical and non-technical teams to engage with data more effectively, improving decision-making processes.

Key Features:

- AI-powered insights for enhanced data management and governance.

- End-to-end data lineage visualization to track data transformations.

- Seamless collaboration between data stewards, analysts, and business users.

Best For:

Organizations looking for a flexible, AI-driven platform that simplifies data governance and supports cross-functional collaboration.

2. Decube

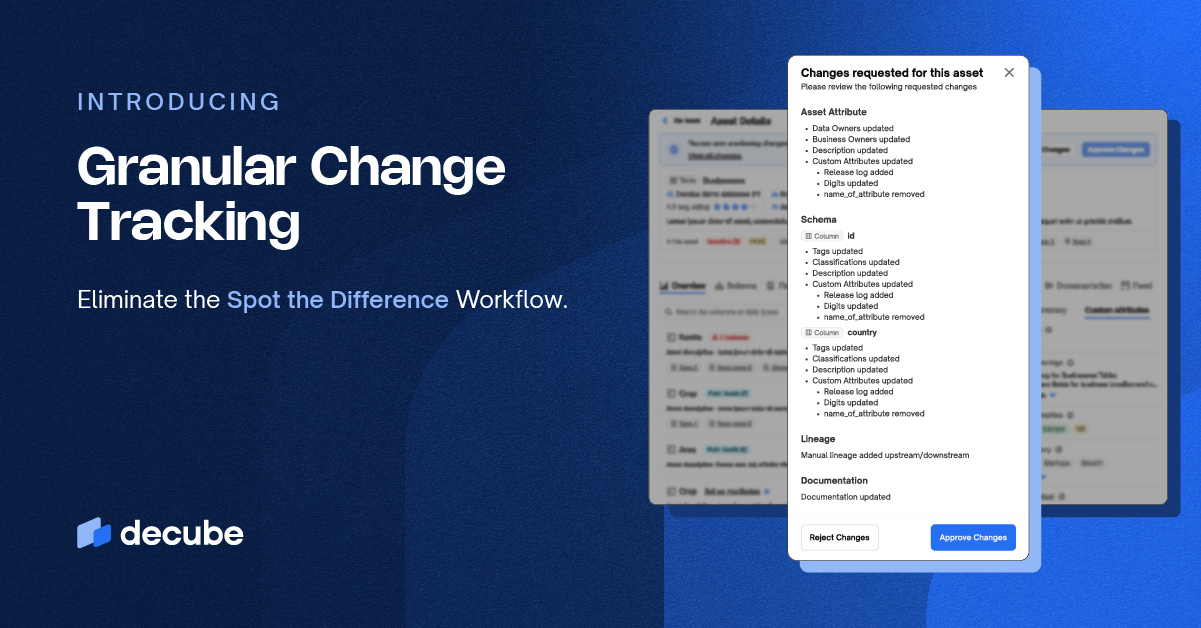

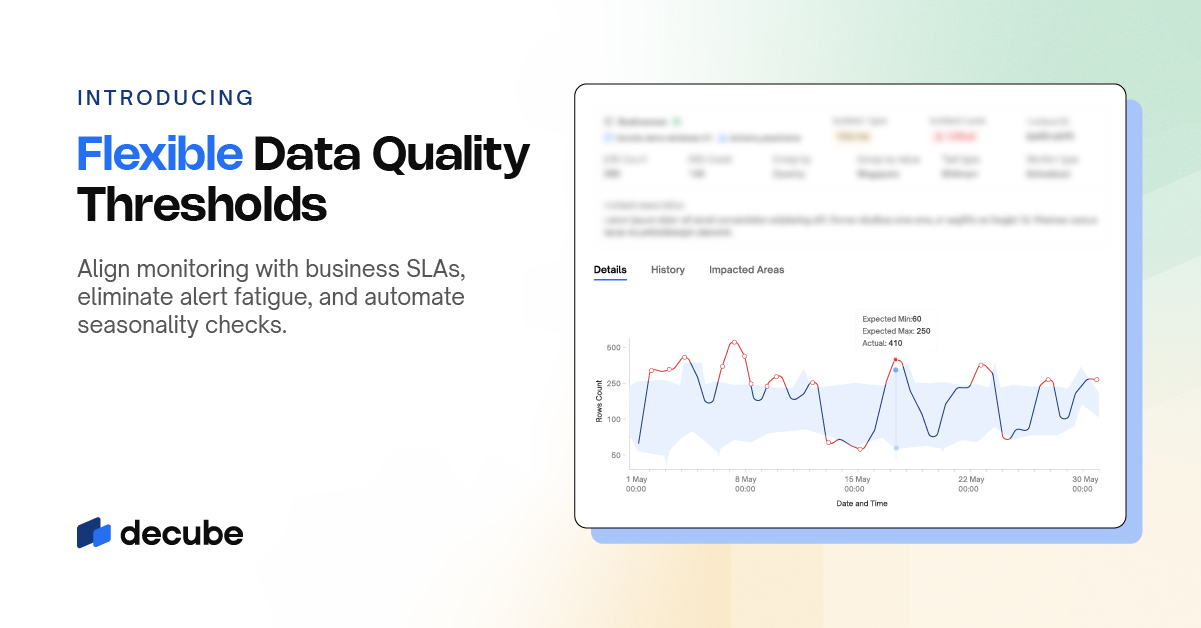

Decube is a standout among data lineage tools, particularly for organizations with intricate data environments. It offers a cloud-native solution that automates the discovery and mapping of data flows, making it easier for businesses to gain real-time visibility into how data is used across systems. Decube’s focus on automation and scalability makes it ideal for companies of all sizes, including enterprises dealing with large-scale data governance needs.

Key Features:

- Automated Data Discovery & Lineage Mapping: Decube scans and catalogs all data assets across the organization, offering a real-time view of data flows and transformations.

- Customizable Governance Frameworks: The platform allows users to build and tailor governance policies to meet specific organizational and regulatory requirements, ensuring compliance with laws such as GDPR, HIPAA, and CCPA.

- Scalable Architecture: Decube is built to grow with your organization, handling increasing data volumes without compromising performance.

- Comprehensive Dashboards: Real-time analytics and dashboards provide insights into data quality and governance, allowing teams to make informed decisions quickly.

Best For:

Organizations needing a scalable, automated solution for data lineage and governance, especially those in industries like finance, healthcare, and e-commerce that require stringent compliance measures.

3. Secoda

Secoda is a powerful tool that focuses on data discovery and cataloging while also providing automated data lineage. It helps users create a clear, unified view of their data by connecting various sources and visualizing data movements across the organization. Secoda’s intuitive design makes it accessible to a wide range of users, from data scientists to business analysts.

Key Features:

- Automatic data lineage mapping across diverse data sources.

- Integrated data cataloging for better data discovery and organization.

- User-friendly interface that simplifies data management for non-technical users.

Best For:

Teams looking for a simple, yet powerful solution for connecting multiple data sources and visualizing their data lineage to improve collaboration and governance.

4. DataGalaxy

DataGalaxy is a data intelligence platform that excels in providing clear, AI-driven data lineage tracking. It maps the journey of data from its origin to its destination, helping businesses manage data quality and regulatory compliance more efficiently. DataGalaxy’s AI engine automates much of the lineage process, making it a reliable choice for organizations that need real-time insights into their data flows.

Key Features:

- AI-powered data lineage tracking to visualize complex data transformations.

- Tools to monitor and improve data quality across multiple systems.

- Detailed reporting features that support compliance with regulations like GDPR and CCPA.

Best For:

Enterprises requiring advanced data lineage capabilities, especially those needing AI-powered solutions to monitor data quality and improve compliance reporting.

5. MANTA

MANTA is one of the most comprehensive data lineage tools available, providing a full overview of data pipelines from start to finish. It helps organizations maintain data quality and governance by visualizing data flows in detail. MANTA is especially useful for companies needing to ensure transparency in data processes for regulatory purposes.

Key Features:

- Full data pipeline visibility with detailed lineage tracking.

- Advanced governance tools to ensure compliance with industry standards.

- Integration with multiple data systems for a seamless data lineage view.

Best For:

Organizations looking for a robust solution to track and analyze data pipelines in real time, ensuring data transparency and compliance.

These top tools offer diverse features and capabilities that help organizations manage their data lineage, improve data governance, and ensure compliance with industry standards. By evaluating your specific needs, you can choose the tool that best supports your data management strategy.

Best Practices for Implementing Automated Data Lineage

Successfully implementing automated data lineage requires a well-structured approach. Here are key best practices to ensure a smooth and effective rollout:

- Establish a Solid Data Governance Framework

Begin by developing a robust data governance framework aligned with your organization’s data management objectives. This framework should clearly define policies, processes, and standards for data governance. Additionally, identify data ownership and assign clear responsibilities to ensure accountability across the organization.

- Prioritize Data Quality and Traceability

Maintaining high levels of data quality and traceability is essential for effective data lineage. Regularly monitor and validate your data to ensure accuracy, consistency, and reliability. Implement automated checks and audits to catch discrepancies early, helping your organization stay compliant with regulatory requirements.

- Foster Cross-Functional Collaboration

Automated data lineage works best when teams across departments collaborate. Encourage knowledge sharing between data stewards, IT teams, and business users to promote a unified understanding of data flows. Providing training and resources ensures all stakeholders are equipped to manage and utilize data lineage effectively.

- Provide Ongoing Training and Support

For a successful adoption, invest in training programs to familiarize all relevant teams with the data lineage tools and their role in the process. Ensure continuous support, so team members can stay updated on best practices and use the tools efficiently as the organization's data landscape evolves.
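The automated checks mentioned under data quality and traceability can start very small. The sketch below is a minimal example under stated assumptions: records are plain Python dictionaries, and the function name `run_checks`, the column names, and the sample rows are hypothetical. Production setups would typically run checks like these inside a pipeline or a dedicated data quality tool.

```python
def run_checks(rows, required, unique_key):
    """Return a list of human-readable issues found in `rows` (a list of dicts)."""
    issues = []
    seen = set()
    for index, row in enumerate(rows):
        # Completeness: every required column must hold a non-empty value.
        for column in required:
            if row.get(column) in (None, ""):
                issues.append(f"row {index}: missing value for '{column}'")
        # Uniqueness: the key column must not repeat across rows.
        key = row.get(unique_key)
        if key is not None:
            if key in seen:
                issues.append(f"row {index}: duplicate {unique_key} '{key}'")
            seen.add(key)
    return issues


# Hypothetical sample records with one empty email and one repeated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "b@example.com"},
]

for issue in run_checks(rows, required=["id", "email"], unique_key="id"):
    print(issue)
# row 1: missing value for 'email'
# row 2: duplicate id '1'
```

Running a check like this on every load, and logging the results alongside lineage information, makes it easier to trace a discrepancy back to the step that introduced it.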

By following these practices, organizations can leverage automated data lineage to improve data governance, ensure compliance, and enhance decision-making.

FAQ

1. What are the key benefits of automated data lineage?

It boosts data quality and accuracy. It also helps with compliance and reporting. Plus, it makes data governance easier and speeds up problem-solving.

2. How does automated data lineage improve data quality and accuracy?

It shows where data comes from and how it changes. This helps find and fix data problems. It makes data trustworthy for better decisions.

3. How can automated data lineage enhance compliance and regulatory reporting?

It tracks data flow, which is key for following rules. It makes audits easier and proves data's history to regulators.

4. What role does automated data lineage play in data governance?

It's crucial for managing data well. It helps understand and control data, making decisions easier and more efficient.

5. How can automated data lineage accelerate troubleshooting and impact analysis?

It shows data connections clearly. This helps find problems fast and understand their effects. It guides better data decisions.

6. How does automated data lineage improve collaboration across teams?

It creates a shared understanding of data. This makes it easier for teams to work together and make decisions.

7. How does Decube enhance automated data lineage for better data governance?

Decube automates data flow tracking, offering real-time visibility into data movement and transformations. Its automated lineage mapping ensures data traceability, integrity, and compliance, while customizable governance frameworks help organizations manage data more efficiently and meet regulatory requirements with less manual effort.
