
Data Catalog: What is it? Definitions, Example, Importance and Benefits

Unlock the potential of your data assets with a data catalog – the key to efficient data discovery, governance, and management.


Data Catalog Concept

Image credits: Photo by Ed Robertson on Unsplash

A data catalog is an essential tool for efficient data management, helping organizations organize, discover, and manage their data assets. It provides a consolidated inventory of data assets, using metadata and data management technologies so that users can readily locate the data they need for analytical and business applications. A data catalog can cover structured and unstructured data, reports, visualizations, machine learning models, and other assets, giving a comprehensive view of an organization's data.

Key Takeaways:

- A data catalog helps organizations effectively manage and organize their data assets.

- Metadata plays a crucial role in describing and summarizing data assets within a data catalog.

- Using a data catalog brings numerous benefits, including improved data efficiency, increased operational efficiency, reduced risk, and better data analysis.

- Data catalogs have evolved to cater to the changing needs of organizations in the digital age.

- Implementing a data catalog brings significant changes to data management and analysis processes, increasing data efficiency and enabling better outcomes.

What is Metadata?

Metadata is data that provides information about other data. In the context of a data catalog, metadata describes or summarizes the data assets, making it easier to locate, evaluate, and understand. There are three types of metadata: technical metadata, which describes how the data is organized and structured; process metadata, which describes the creation, access, and usage history of the data asset; and business metadata, which describes the business value, fitness for purpose, and regulatory compliance of the data asset.

Metadata plays a crucial role in data analysis, data governance, and data quality management. It provides valuable insights into the characteristics and properties of data assets, enabling data professionals to make informed decisions about their usage. Technical metadata helps users understand the technical aspects of data, such as data format, schema, and storage location. Process metadata provides visibility into the data's lifecycle, including its creation, modification, and consumption. Business metadata adds business context to the data, allowing users to assess its relevance and applicability to specific use cases. By leveraging metadata, organizations can improve data accuracy, compliance, and usability, leading to more effective data-driven decision-making.

"Metadata is the backbone of a data catalog, enabling users to search, evaluate, and utilize data assets with confidence and efficiency."

For example, imagine a data catalog containing customer data for a retail company. The technical metadata may include information about the data source, data format, and any transformations applied to the data. Process metadata could track when the data was collected, who accessed it, and how it was used. Business metadata might include customer segmentation information or compliance-related tags indicating whether the data is subject to specific regulations. By having this metadata available in the data catalog, data professionals can quickly find the relevant customer data for their analysis or reporting needs.
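To make the retail example concrete, here is a minimal sketch of how a catalog entry might group the three kinds of metadata. The class and field names (`CatalogEntry`, `compliance_tags`, and so on) and the sample values are hypothetical illustrations, not the schema of any particular catalog product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class TechnicalMetadata:
    source: str                    # where the data lives
    data_format: str               # e.g. "table", "parquet"
    schema: dict[str, str]         # column name -> type
    transformations: list[str] = field(default_factory=list)


@dataclass
class ProcessMetadata:
    collected_on: date
    accessed_by: list[str] = field(default_factory=list)  # who has read the asset
    used_in: list[str] = field(default_factory=list)      # reports/models that consume it


@dataclass
class BusinessMetadata:
    description: str
    segments: list[str] = field(default_factory=list)
    compliance_tags: list[str] = field(default_factory=list)


@dataclass
class CatalogEntry:
    name: str
    technical: TechnicalMetadata
    process: ProcessMetadata
    business: BusinessMetadata

    def is_regulated(self) -> bool:
        """True if any compliance tag marks the asset as subject to regulation."""
        return bool(self.business.compliance_tags)


# Hypothetical entry for the retail customer data described above.
customers = CatalogEntry(
    name="retail.customers",
    technical=TechnicalMetadata(
        source="warehouse.sales_db",
        data_format="table",
        schema={"customer_id": "INTEGER", "email": "TEXT", "segment": "TEXT"},
        transformations=["deduplicated on customer_id"],
    ),
    process=ProcessMetadata(
        collected_on=date(2024, 1, 15),
        accessed_by=["analyst_a"],
        used_in=["monthly_churn_report"],
    ),
    business=BusinessMetadata(
        description="One row per registered retail customer",
        segments=["loyalty", "new"],
        compliance_tags=["GDPR"],
    ),
)
print(customers.is_regulated())  # True
```

Keeping the three groups as separate objects mirrors the distinction the article draws: technical fields are usually harvested automatically, while business fields tend to be curated by people.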

Overall, metadata serves as a vital component in the organization, discovery, and management of data assets. Its inclusion in a data catalog enhances data understanding, facilitates data governance processes, and improves overall data quality and lineage tracking within an organization.

Data Catalog Features and Capabilities

A data catalog offers several features and capabilities that revolve around metadata management. These features enable efficient data discovery, evaluation, and access, as well as streamlined data curation and automated discovery. Many data catalogs also use AI and machine learning algorithms to improve dataset search and evaluation.

Automated Dataset Discovery

A data catalog automates the process of discovering datasets, both during the initial catalog build and for ongoing updates. This feature saves time and resources by automatically identifying and adding new datasets to the catalog, ensuring it remains up-to-date.
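Automated discovery can be as simple as periodically listing what exists in a source and diffing it against the catalog. The sketch below does this for a SQLite database using Python's built-in `sqlite3` module; `scan_tables` and `sync_catalog` are hypothetical helper names, and a production catalog would run equivalent connectors against many kinds of sources.

```python
from __future__ import annotations

import sqlite3


def scan_tables(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Return {table_name: [column names]} for every user table in a SQLite database."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    found = {}
    for (table,) in tables:
        # pragma_table_info is SQLite's table-valued form of PRAGMA table_info
        cols = conn.execute(
            "SELECT name FROM pragma_table_info(?) ORDER BY cid", (table,)
        ).fetchall()
        found[table] = [name for (name,) in cols]
    return found


def sync_catalog(catalog: dict[str, list[str]], conn: sqlite3.Connection) -> tuple[set[str], set[str]]:
    """Add newly discovered tables, drop vanished ones, refresh columns; return (added, removed)."""
    found = scan_tables(conn)
    added = set(found) - set(catalog)
    removed = set(catalog) - set(found)
    for name in removed:
        del catalog[name]
    catalog.update(found)  # also refreshes column lists of tables already cataloged
    return added, removed


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
catalog: dict[str, list[str]] = {}
print(sync_catalog(catalog, conn))  # ({'orders'}, set())

# A later scan picks up a table created after the initial build.
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
added, removed = sync_catalog(catalog, conn)
print(added, removed)  # {'customers'} set()
```

The first call corresponds to the initial catalog build; each later call is an incremental update that keeps the catalog in step with the source.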

AI and Machine Learning

Catalogs powered by AI and machine learning can enhance metadata management by collecting information about data assets and tagging them automatically. These technologies support efficient categorization, classification, and enrichment of metadata, making it easier for users to discover and evaluate datasets.

Dataset Searching

A data catalog provides robust dataset searching capabilities, allowing users to find relevant datasets based on various criteria such as facets, keywords, and business terms. This feature simplifies the process of locating specific datasets, saving time and improving data accessibility.
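A minimal sketch of the three search criteria mentioned above, assuming each dataset carries a free-text description, a dictionary of facets, and a set of business terms. The `Dataset` class, the `search` function, and the sample entries are illustrative; real catalogs typically back this with a search index rather than a linear scan.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    description: str
    facets: dict[str, str] = field(default_factory=dict)  # e.g. {"domain": "sales"}
    business_terms: set[str] = field(default_factory=set)


def search(datasets, keyword="", facets=None, term=None):
    """Return names of datasets matching the keyword, every facet filter, and the business term."""
    facets = facets or {}
    keyword = keyword.lower()
    hits = []
    for ds in datasets:
        text = f"{ds.name} {ds.description}".lower()
        if keyword and keyword not in text:
            continue  # keyword not found in name or description
        if any(ds.facets.get(k) != v for k, v in facets.items()):
            continue  # at least one facet filter does not match
        if term is not None and term not in ds.business_terms:
            continue  # dataset not linked to the requested business term
        hits.append(ds.name)
    return hits


catalog = [
    Dataset("sales.orders", "Daily retail orders", {"domain": "sales", "source": "warehouse"}, {"Revenue"}),
    Dataset("crm.customers", "Customer master records", {"domain": "crm", "source": "warehouse"}, {"Customer"}),
    Dataset("sales.returns", "Returned orders by store", {"domain": "sales", "source": "lake"}, {"Revenue"}),
]
print(search(catalog, keyword="orders", facets={"domain": "sales"}))  # ['sales.orders', 'sales.returns']
print(search(catalog, facets={"source": "warehouse"}, term="Revenue"))  # ['sales.orders']
```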

Dataset Evaluation

Dataset evaluation is an important feature of a data catalog. It enables users to preview datasets, access metadata, review ratings and quality information, and assess the suitability of datasets for their specific needs. This functionality facilitates informed decision-making and ensures the use of high-quality data.
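Dataset evaluation often starts with simple quality signals computed on a preview. As a hypothetical example, the sketch below measures per-column completeness (the share of non-null values) and treats a dataset as fit for use when every required column meets a threshold; the 0.95 default is an arbitrary assumption, not an industry standard.

```python
from __future__ import annotations


def profile(rows: list[dict], columns: list[str]) -> dict[str, float]:
    """Share of non-null values per column in a preview sample (1.0 = fully populated)."""
    if not rows:
        return {col: 0.0 for col in columns}
    return {
        col: sum(row.get(col) is not None for row in rows) / len(rows)
        for col in columns
    }


def fit_for_use(rows: list[dict], required: list[str], min_completeness: float = 0.95) -> bool:
    """True if every required column meets the completeness threshold."""
    scores = profile(rows, required)
    return all(score >= min_completeness for score in scores.values())


preview = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": "d@example.com"},
]
print(profile(preview, ["customer_id", "email"]))  # {'customer_id': 1.0, 'email': 0.75}
print(fit_for_use(preview, ["customer_id"]))        # True
print(fit_for_use(preview, ["email"]))              # False
```

Completeness is only one signal; ratings, freshness, and validation results would feed the same kind of check.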

Data Access and Integration

A data catalog seamlessly integrates with various data access technologies, making it easy for users to access the datasets they need. This feature ensures smooth data integration and enables efficient data analysis, fostering better insights and informed decision-making.

Data Curation and Governance Support

In addition to enhancing data discovery and access, a data catalog facilitates data curation and collaborative data management. It provides features that enable users to curate and annotate datasets, track data usage, and support data governance initiatives. These capabilities contribute to improved data quality and effective data management processes.

With its rich set of features and capabilities, a data catalog serves as a valuable tool for managing metadata, facilitating efficient data discovery and evaluation, and enabling seamless data access. By harnessing the power of AI and machine learning, a data catalog empowers organizations with comprehensive data cataloging tools that enhance their data management and analysis capabilities.

Benefits of Using a Data Catalog

Using a data catalog brings numerous benefits to organizations, enabling them to optimize their data management and analysis processes.

- Data Efficiency: A data catalog allows users to easily find the data they need, avoiding duplication and saving time and effort in searching for relevant datasets. This improves data integration and ensures data is utilized more efficiently.

- Data Context: By providing detailed information and insights about datasets, a data catalog gives users valuable context that enhances decision-making and analysis. It helps users understand the content and characteristics of the data, leading to more accurate and informed interpretations.

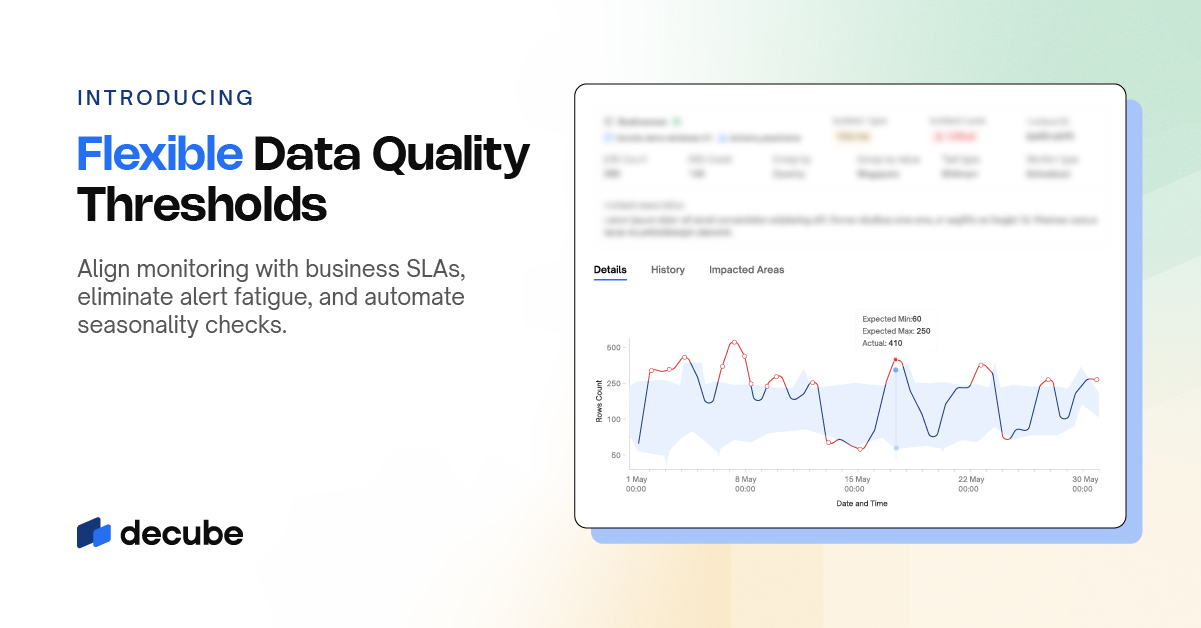

- Reduced Risk of Error: With a data catalog, organizations can mitigate the risk of errors in data analysis. By ensuring that users have access to accurate and up-to-date data, a data catalog helps maintain data integrity and reliability.

- Improved Data Analysis: The comprehensive metadata provided by a data catalog empowers users with the necessary information to perform thorough data analysis. It enhances data exploration, allows for better data evaluation, and supports the identification of relevant datasets for analysis.

- Cost Savings: Implementing a data catalog can result in cost savings for organizations. With improved data efficiency and better utilization of existing data assets, organizations can reduce unnecessary data storage costs and optimize their data infrastructure.

- Operational Efficiency: A data catalog streamlines data access and reduces the time spent on data discovery, enabling users to focus on analysis and decision-making. It facilitates a more efficient workflow and promotes collaboration among data professionals.

- Competitive Advantages: Organizations that leverage a data catalog gain a competitive edge in the market. By efficiently harnessing their data assets, organizations can make data-driven decisions faster, respond to market dynamics more effectively, and innovate with agility.

- Better Customer Experience: A data catalog enables organizations to understand their customers better and deliver personalized experiences. By accessing relevant customer data from the catalog, organizations can tailor their products, services, and marketing strategies to meet customer expectations and preferences.

- Fraud and Risk Advantage: With improved data quality, metadata management, and data governance, a data catalog helps organizations detect and mitigate potential fraud and risks in their operations. It enables proactive monitoring and early identification of suspicious activities.

By effectively utilizing a data catalog, organizations can unlock these benefits and optimize their data management, analysis, and decision-making processes.

Evolution of Data Catalogs

Data catalogs have evolved over time to meet the changing needs of organizations in the digital age. They started as digital versions of physical catalogs for books and documents, providing a convenient way to organize and access information. However, as the volume and complexity of data grew, organizations recognized the need for a centralized and efficient system to manage their data assets.

In the early stages, data catalogs primarily focused on managing data assets within databases and data warehouses. These catalogs provided a structured approach to organizing and categorizing data, making it easier for users to find and analyze the information they needed. However, with the advent of big data analytics and the proliferation of data sources, including digital libraries and cloud-based platforms, traditional data catalogs faced new challenges.

In response to these challenges, enterprise data catalogs emerged to address the evolving requirements of organizations. These catalogs expanded their capabilities beyond traditional data assets to include unstructured data, metadata management, and data exploration. They leveraged advanced technologies such as AI and machine learning to automate data discovery, metadata extraction, and data lineage tracking. With the integration of big data analytics and self-service analytics, enterprise data catalogs became powerful tools for managing and deriving insights from diverse and complex data sources.

The evolution of data catalogs also brought about the concept of digital libraries, where organizations could store and access a wide variety of data assets. Digital libraries go beyond traditional data catalogs by providing a broader range of data resources, including documents, images, videos, and more. These libraries enable users to explore and utilize various data formats and types, enhancing the scope and value of data analysis and decision-making.

Key Milestones in the Evolution of Data Catalogs:

- Transition from physical catalogs to digital catalogs for books and documents

- Inclusion of data assets within databases and data warehouses

- Expansion to embrace big data analytics and self-service analytics

- Introduction of enterprise data catalogs to manage diverse data sources

- Integration of AI and machine learning for automated data discovery and metadata management

- Emergence of digital libraries for storing and accessing a wide range of data assets

Today, modern data catalogs continue to evolve, keeping pace with technological advancements and the rapidly changing data landscape. They play a vital role in helping organizations effectively manage their data assets, streamline data analysis, and enable self-service analytics. With comprehensive features and capabilities, data catalogs empower users to extract meaningful insights and drive data-driven decision-making.

What Changes with a Data Catalog Implementation

Implementing a data catalog brings significant changes to data management and analysis processes. With a data catalog in place, data users, such as data engineers, data scientists, and data stewards, can find and access the right data more efficiently, reducing the time spent on searching and preparing data. This streamlined data access empowers users to focus on their core tasks, leading to improved productivity.

The data catalog also plays a crucial role in data analysis by providing valuable context, metadata, and data quality information. By having access to this information, data analysts and decision-makers can make more accurate and informed decisions, leading to better outcomes.

Furthermore, a data catalog enhances data governance initiatives. It provides a centralized view of data assets and their usage, making it easier to enforce data policies and ensure compliance. With improved data governance, organizations can maintain data integrity, protect sensitive information, and improve overall data security.

The implementation of a data catalog ultimately increases data efficiency within an organization. By organizing and cataloging data assets, the catalog enables data users to locate and access the data they need more quickly, eliminating redundant efforts and data duplication. This, in turn, improves data workflows and overall operational efficiency.

Benefits of a Data Catalog Implementation:

- Streamlined data access and reduced search time

- Improved data analysis with valuable context and metadata

- Enhanced data governance and compliance

- Increased data efficiency and operational productivity

"Implementing a data catalog transformed how we work with data. We no longer waste time searching for the right data, and the metadata provided by the catalog gives us the necessary context for analysis. It has truly revolutionized our data management processes." - John Smith, Data Analyst at XYZ Corporation

By implementing a data catalog, organizations can unlock the full potential of their data, enabling self-service analytics, improving data governance, and increasing overall data efficiency. It is a transformative solution that empowers data users and drives success in today's data-driven world.

User Adoption Strategies for Data Catalogs

To maximize the benefits of a data catalog, organizations need to implement effective user adoption strategies. User training and onboarding programs play a crucial role in educating users on how to effectively utilize the data catalog. By providing comprehensive training sessions and onboarding resources, organizations can ensure that users are equipped with the knowledge and skills necessary to make the most of the data catalog.

Encouraging collaboration and teamwork among users is another important aspect of user adoption. By fostering a culture of collaboration, organizations can create an environment where users actively engage with the data catalog, exchange ideas, and work together on data projects. This collaborative approach not only improves the quality of data analysis but also encourages knowledge sharing and cross-functional collaboration.

Recognizing and rewarding user contributions is also key to driving user engagement. By acknowledging the efforts and achievements of users who actively leverage the data catalog, organizations can incentivize further engagement and motivate users to continue using the platform. User recognition can take various forms, such as highlighting exceptional data projects or providing incentives for active participation.

In addition to training, collaboration, and recognition, organizations should provide workshops, tutorials, and documentation to support users in utilizing the data catalog effectively. Workshops and tutorials serve as interactive learning opportunities, allowing users to delve deeper into the functionalities and capabilities of the platform. Documentation, on the other hand, provides users with detailed instructions and reference materials that they can access at any time to enhance their understanding of the data catalog.

By implementing these user adoption strategies, organizations can create a user-centric data culture that promotes active participation in data projects and encourages users to leverage the data catalog for improved data management and analysis.

Data Catalog Use Cases

A data catalog offers a myriad of use cases that can greatly benefit organizations across various industries. Here are some key use cases where a data catalog proves to be invaluable:

1. Self-Service Analytics

A data catalog enables self-service analytics by providing users with a centralized repository of data assets. Users can easily search, evaluate, and access relevant datasets for their analysis projects, empowering them to make data-driven decisions efficiently.

2. Audit and Compliance

A data catalog supports audit and compliance efforts by providing detailed data lineage, provenance, and metadata tracking. This enables organizations to ensure data accuracy, trace data sources, and satisfy regulatory requirements.
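Tracing provenance for an audit amounts to walking lineage edges upstream until reaching datasets with no recorded parents. A minimal sketch, assuming lineage is stored as a hypothetical mapping from each dataset to the datasets it is built from (the dataset names are illustrative):

```python
from __future__ import annotations

from collections import deque

# Hypothetical lineage: each dataset maps to the datasets it is derived from.
UPSTREAM = {
    "finance.revenue_report": ["sales.orders_clean"],
    "sales.orders_clean": ["raw.pos_orders", "raw.web_orders"],
    "raw.pos_orders": [],
    "raw.web_orders": [],
}


def trace_sources(dataset: str, upstream: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk upstream; return the original sources (datasets with no parents)."""
    seen, queue, sources = {dataset}, deque([dataset]), []
    while queue:
        current = queue.popleft()
        parents = upstream.get(current, [])
        if not parents and current != dataset:
            sources.append(current)
        for parent in parents:
            if parent not in seen:  # avoid revisiting shared ancestors
                seen.add(parent)
                queue.append(parent)
    return sorted(sources)


print(trace_sources("finance.revenue_report", UPSTREAM))  # ['raw.pos_orders', 'raw.web_orders']
```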

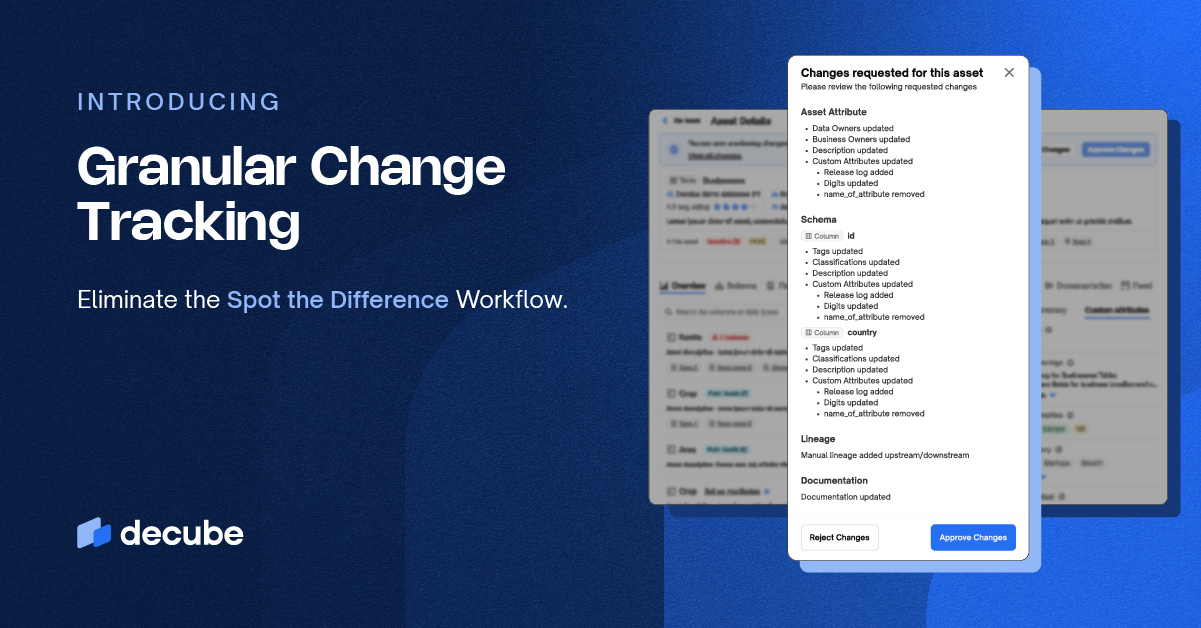

3. Change Management

Data catalogs play a crucial role in change management processes. They help users understand the impact of changes in data pipelines and systems, facilitating smoother transitions and minimizing disruptions in data operations.
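Impact analysis is the downstream counterpart of provenance: starting from the asset that changes, follow lineage to everything that consumes it. The sketch below assumes lineage is available as hypothetical (producer, consumer) pairs and uses a breadth-first search, so assets reachable by several paths are reported only once.

```python
from __future__ import annotations

from collections import defaultdict, deque


def downstream_impact(changed: str, edges: list[tuple[str, str]]) -> list[str]:
    """Return every asset that directly or indirectly consumes `changed`.

    `edges` are (producer, consumer) pairs taken from the catalog's lineage.
    """
    consumers = defaultdict(list)
    for producer, consumer in edges:
        consumers[producer].append(consumer)
    impacted, queue = set(), deque([changed])
    while queue:
        for nxt in consumers[queue.popleft()]:
            if nxt not in impacted and nxt != changed:
                impacted.add(nxt)
                queue.append(nxt)
    return sorted(impacted)


# Hypothetical lineage edges.
lineage = [
    ("raw.pos_orders", "sales.orders_clean"),
    ("sales.orders_clean", "finance.revenue_report"),
    ("sales.orders_clean", "ml.demand_forecast"),
    ("crm.customers", "ml.demand_forecast"),
]
print(downstream_impact("raw.pos_orders", lineage))
# ['finance.revenue_report', 'ml.demand_forecast', 'sales.orders_clean']
```

Before altering `raw.pos_orders`, a team would use this list to notify the owners of the report and the forecast model.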

4. Business Glossaries

The creation and maintenance of business glossaries are simplified with the use of a data catalog. Business glossaries provide a common vocabulary and understanding of data assets, enhancing metadata management and supporting comprehensive data governance initiatives.

5. Metadata Management

Data catalogs excel in metadata management, allowing organizations to efficiently organize, annotate, and enrich metadata. This improves data discoverability, understanding, and collaboration among data professionals.

6. Data Governance

The implementation of a data catalog strengthens data governance practices by providing a centralized and unified view of data assets. This ensures proper data stewardship, access control, and compliance with data governance policies and regulations.

7. Data Classification

Data catalogs facilitate data classification by allowing organizations to categorize and label data assets based on their sensitivity, business impact, or regulatory requirements. This supports data access control and data protection efforts.
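One common approach to automated classification is to sample column values and tag the column when most samples match a known pattern. This is a simplified sketch; the regular expressions, labels, and 0.8 threshold are assumptions chosen for illustration and would miss many real-world formats.

```python
from __future__ import annotations

import re

# Illustrative patterns only; production classifiers need far more robust rules.
PATTERNS = {
    "PII:email": re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$"),
    "PII:phone": re.compile(r"^\+?[0-9][0-9 \-]{7,14}$"),
}


def classify_column(values: list[str], threshold: float = 0.8) -> set[str]:
    """Tag a column with each label whose pattern matches >= `threshold` of non-empty samples."""
    sample = [v for v in values if v]
    if not sample:
        return set()
    tags = set()
    for label, pattern in PATTERNS.items():
        matches = sum(bool(pattern.match(v)) for v in sample)
        if matches / len(sample) >= threshold:
            tags.add(label)
    return tags


# Hypothetical sampled column values.
columns = {
    "contact": ["ann@example.com", "bo@example.org", "", "cy@example.net"],
    "phone": ["+65 6123 4567", "+1 555-010-9999", "020 7946 0000"],
    "city": ["Singapore", "Austin", "Berlin"],
}
for name, vals in columns.items():
    print(name, classify_column(vals) or {"unclassified"})
```

The resulting tags are what access-control and data-protection rules can then key on.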

In conclusion, a data catalog offers a range of use cases that empower organizations with self-service analytics, streamline audit and compliance processes, facilitate change management, enhance metadata management and data governance, and support data classification efforts. Leveraging the capabilities of a data catalog can bring significant value and efficiency to data-driven organizations.

What is Needed for Effective Use of a Data Catalog

To effectively utilize a data catalog, organizations must have a comprehensive understanding of metadata and its various types. Metadata serves as the backbone of a data catalog, enabling efficient data management and organization. It is essential to leverage metadata at scale to maximize the potential of a data catalog.

Metadata comes in different forms, such as technical metadata, business metadata, and operational metadata (also called process metadata). Technical metadata describes the technical aspects of the data, including its structure and organization. Business metadata, on the other hand, provides insights into the business context, value, and compliance of the data. Lastly, operational metadata tracks the history and usage patterns of the data asset.

Organizations can leverage artificial intelligence (AI) and machine learning to automate the collection and management of metadata. By harnessing the power of AI and machine learning, data management capabilities can be enhanced, allowing for more efficient data discovery, analysis, and integration.

Metadata augmentation is a critical aspect of effective data catalog usage. Through metadata augmentation, organizations can achieve self-service data preparation, personalized data access, and role-based access control. Metadata augmentation also enables advanced capabilities such as data lineage tracking and data quality management.

To realize the full potential of a data catalog, organizations need the ability to harvest metadata from various data sources and curate it with subject matter expertise. This ensures that metadata is accurate, relevant, and up to date, allowing data professionals to make informed decisions and effectively utilize the data catalog.

Key Points:

- A comprehensive understanding of metadata is crucial for effective use of a data catalog.

- Metadata comes in various types: technical metadata, business metadata, and operational metadata.

- Leveraging AI and machine learning can enhance metadata management capabilities.

- Metadata augmentation enables advanced data management capabilities.

- The ability to harvest and curate metadata is essential for maximizing the potential of a data catalog.

"Metadata is the backbone of a data catalog, enabling efficient data management and organization."

Essential Features of a Data Catalog

A data catalog plays a crucial role in data management and analysis, offering a wide range of essential features that enhance its functionality and utility. These features enable organizations to efficiently search, discover, curate, and govern their data assets. Let's explore the key features that make a data catalog indispensable in today's data-driven world:

- Robust Search and Discovery: A data catalog should provide robust search capabilities, allowing users to quickly find the data they need. It should support keyword searches, technical term searches, and even business term searches to cater to diverse user requirements.

- Flexible Filtering Options: To refine search results and narrow down datasets, a data catalog should offer flexible filtering options. Users should be able to filter datasets based on various criteria such as data type, data source, date range, and more.

- Metadata Harvesting: An effective data catalog should be able to harvest metadata from various data sources, both on-premises and cloud-based. This allows for a comprehensive and unified view of metadata, facilitating better data understanding and analysis.

- Metadata Curation: Metadata curation tools within a data catalog enable subject matter experts to contribute business knowledge and enrich the metadata with annotations, classifications, and ratings. This ensures that the metadata is accurate, relevant, and up to date.

- Automation: Automation is a critical feature that saves time and effort in managing and maintaining a data catalog. With automation capabilities leveraging AI and machine learning, tasks such as metadata collection, data onboarding, and data governance can be streamlined, resulting in improved operational efficiency.

- Data Intelligence: A data catalog should incorporate data intelligence capabilities, utilizing AI and machine learning to provide insights and recommendations. This empowers users to make informed decisions, discover hidden patterns, and uncover valuable insights from their data.

- Data Onboarding: Seamless data onboarding is essential for a data catalog, enabling users to easily add new datasets and keep the catalog up to date. Automation and intelligent data onboarding processes ensure that new data assets are efficiently integrated into the catalog, enabling quick and easy access.

- Data Governance: Data governance is a fundamental aspect of data management, and a data catalog should support data governance practices. It should provide capabilities for data lineage tracking, data access control, and compliance with data regulations, ensuring data governance requirements are met.

- Data Lineage: Tracking data lineage is crucial for understanding the origin, transformations, and usage of data. A data catalog should include data lineage capabilities, allowing users to visualize and analyze the complete data flow, ensuring data integrity and supporting data analysis.

- Data Access Control: To maintain data security and privacy, a data catalog should provide granular data access control. Role-based access control (RBAC) and data access policies enable organizations to restrict data access based on user roles and ensure data is accessed only by authorized individuals.
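To illustrate role-based access control from the list above, here is a minimal sketch assuming a hypothetical policy in which each role is cleared for a set of sensitivity tags, and an asset is readable only if the user's combined clearances cover all of its tags. Role names, tags, and asset names are illustrative; in practice, enforcement is often delegated to the underlying data platform.

```python
from __future__ import annotations

# Hypothetical policy: sensitivity tags each role is cleared to read.
ROLE_CLEARANCE = {
    "analyst": {"public", "internal"},
    "data_steward": {"public", "internal", "confidential"},
    "privacy_officer": {"public", "internal", "confidential", "pii"},
}

# Hypothetical tags recorded on catalog assets (e.g. by classification).
ASSET_TAGS = {
    "sales.orders": {"internal"},
    "crm.customers": {"confidential", "pii"},
}


def can_read(roles: set[str], asset: str) -> bool:
    """Grant access only if the user's roles together cover every tag on the asset."""
    cleared = set().union(*(ROLE_CLEARANCE.get(r, set()) for r in roles))
    tags = ASSET_TAGS.get(asset)
    if tags is None:
        return False  # deny by default for assets missing from the catalog
    return tags <= cleared


print(can_read({"analyst"}, "sales.orders"))          # True
print(can_read({"data_steward"}, "crm.customers"))    # False
print(can_read({"privacy_officer"}, "crm.customers")) # True
```

Denying access to uncataloged assets is a deliberate choice in this sketch: it nudges teams to register data before it can be used.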

A data catalog that encompasses these essential features becomes a vital tool for managing and analyzing data assets: it promotes data governance, drives informed decision-making, maximizes operational efficiency, and helps organizations gain a competitive edge in today's data-driven landscape.

Take Advantage of a Data Catalog Solution

Leveraging a data catalog solution is crucial for efficient data management and analysis. With the vast amount of data being generated and stored by organizations, it can be challenging to effectively access, manage, and analyze this valuable resource. That's where a data catalog solution comes in.

A data catalog solution serves as a centralized platform that enables organizations to optimize data access, streamline data integration processes, and improve metadata management and curation. By implementing a data catalog solution, organizations can unlock the full potential of their data assets, gain deeper insights, and make better-informed decisions.

One of the key advantages of a data catalog solution is its ability to facilitate data management. It provides a comprehensive view of the organization's data assets, allowing for easy discovery, organization, and retrieval of data. By having a centralized catalog, data professionals can efficiently find the data they need for analysis and decision-making, saving time and resources.

In addition to data management, a data catalog solution also enhances data analysis capabilities. It enables users to evaluate and understand the quality and relevance of different datasets, making it easier to select the most appropriate data for specific analysis projects. This ensures that data analysis is based on accurate and reliable information, leading to more accurate and insightful results.

A data catalog solution is like a library for data, enabling organizations to effectively access, manage, and analyze their data assets.

Data governance is another area where a data catalog solution plays a crucial role. It provides a framework for enforcing data governance policies, ensuring that data is trustworthy, consistent, and compliant with regulatory requirements. By implementing a data catalog solution, organizations can establish and maintain data governance practices, improving data quality and ensuring data integrity.

When selecting a data catalog solution, there are a few key features and capabilities to consider. The solution should have robust metadata management capabilities, allowing for easy cataloging and organization of data assets. It should also support metadata curation, enabling subject matter experts to contribute their knowledge and annotations to the metadata.

Data integration is another important aspect to consider. The data catalog solution should seamlessly integrate with existing data systems and technologies, enabling efficient data integration and access across the organization. This ensures that users can access the data they need, regardless of where it is stored or how it is structured.

To summarize, a data catalog solution is a powerful tool that enables organizations to optimize data management, enhance data analysis, improve data governance, and streamline data integration. By leveraging a data catalog solution, organizations can take full advantage of their data assets, gain deeper insights, and make more informed decisions. Investing in the right data catalog solution with comprehensive features and capabilities is essential for maximizing the benefits of a data catalog.

Conclusion

To summarize, a data catalog is a valuable tool for effective data management, analysis, and governance. By using metadata to organize data assets and provide quick access to them, it boosts data efficiency and supports better decision-making. Implementing a data catalog, together with effective user adoption strategies, can help organizations realize their data's full potential, resulting in cost savings, operational efficiency, and competitive advantages.

The advantages of using a data catalog are numerous. It improves data analysis by providing context and metadata, lowering the likelihood of errors and increasing data quality. It also provides richer data context, allowing businesses to make better-informed decisions and improve customer experiences. With the right data catalog solution, organizations can harness the potential of their data and succeed in today's data-driven world.

If you’re looking for a data catalog solution that can help you harness the full potential of your data, Decube is definitely worth checking out!
