
Data Catalog, Semantic Layer, and Warehouse: Core Pillars of Enterprise Management

Explore how a Data Catalog, a semantic layer, and a data warehouse together form the foundation of effective enterprise data management.

Did you know that, by one widely cited industry estimate, as much as 73% of enterprise data goes unused for analytics? Using data well is key to success, and the Data Catalog, Semantic Layer, and Data Warehouse are vital for managing enterprise data.

For modern businesses, having good data governance and management systems is a must. These tools help make data clear, support smart decisions, and improve business processes.

Let's explore how each system works. The Data Catalog keeps track of data assets. The Semantic Layer makes complex data easy to understand. And the Data Warehouse stores lots of data. Knowing about these can help you see their benefits and how they work together.

Key Takeaways

- A Data Catalog organizes and tracks data assets, facilitating efficient discovery and use.

- The Semantic Layer enables simpler interaction with complex data systems through user-friendly interfaces.

- A Data Warehouse serves as the central repository for large volumes of data.

- All three components are critical for modern enterprise data management and data governance.

- Integration of these systems leads to better data visibility, informed decision-making, and streamlined business processes.

What Is a Data Warehouse?

A data warehouse is a central repository for large volumes of structured data drawn from different sources. It underpins business intelligence (BI) solutions. It can handle large datasets and, on many modern platforms, support near-real-time analysis.

Definition and Functions

A data warehouse is the core of data analysis. It brings together data from many places into one system. Its main tasks are:

- Data integration: It combines structured data from various databases and apps.

- Querying: It supports complex queries across the combined data, making it easier to retrieve the information that matters.

- Reporting: It helps make detailed reports that guide decisions.

- Data mining: It finds patterns and connections in big datasets.
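
As a rough illustration of the integration, querying, and reporting tasks listed above, the following sketch uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The source names (`crm`, `web`), the `orders` table, and the sample rows are all hypothetical; a production warehouse would run on a dedicated platform with a proper loading pipeline.

```python
import sqlite3

# Hypothetical records standing in for two operational systems.
crm_orders = [("c1", "2024-01-05", 120.0), ("c2", "2024-01-06", 80.0)]
web_orders = [("c1", "2024-01-07", 40.0)]

def build_warehouse(sources):
    """Load rows from every source into one table, tagging each row with its origin."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders "
        "(source TEXT, customer_id TEXT, order_date TEXT, amount REAL)"
    )
    for name, rows in sources.items():
        conn.executemany(
            "INSERT INTO orders VALUES (?, ?, ?, ?)",
            [(name, *row) for row in rows],
        )
    conn.commit()
    return conn

def revenue_by_customer(conn):
    """Report the total order amount per customer across all sources."""
    cur = conn.execute(
        "SELECT customer_id, SUM(amount) FROM orders "
        "GROUP BY customer_id ORDER BY customer_id"
    )
    return cur.fetchall()

warehouse = build_warehouse({"crm": crm_orders, "web": web_orders})
print(revenue_by_customer(warehouse))  # [('c1', 160.0), ('c2', 80.0)]
```

Tagging each row with its `source` keeps the origin visible after integration, so the same table can answer both combined and per-system questions.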

Key Features

Modern data warehouses have important features that make them useful:

- Scalability: They accommodate growing data volumes while keeping stored data intact and available.

- Real-time analytics: They can quickly process and analyze data, helping with quick decisions.

- Integration: They work well with different data sources and systems, giving a full view of data.

- Performance optimization: They use smart techniques to make queries and data access faster.

What Is a Data Catalog?

A data catalog is like a map for your data. It helps organizations manage, find, and organize their data better. It uses metadata to make data easier to understand and find, improving how data is used across the company.

Definition and Functions

A data catalog is a detailed inventory of your data assets and their metadata. It makes finding data across your company easy. The main jobs of a data catalog are:

- Data Search and Discovery: It helps users find data from different sources easily.

- Governance: It makes sure data follows rules and standards.

- Collaboration: It helps teams work together by keeping knowledge about data in one shared place.

Key Features

Data catalogs have features that make them key for managing data today. These include:

- Automated Metadata Collection: They automatically collect and update data details, easing the work of data managers.

- User-Friendly Interfaces: Easy-to-use search and sharing tools help users get to and share data easily.

- Integration: They work well with many data tools, making the data process smoother.

These features help manage metadata well, making it easier to find and organize data. This strengthens business intelligence and improves operational efficiency.
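
To make automated metadata collection and search more concrete, here is a minimal sketch that harvests table and column names from a SQLite database and searches them by keyword. It is an illustration only, not how any particular catalog product works; the `customers` and `orders` tables and both function names are hypothetical.

```python
import sqlite3

def harvest_metadata(conn):
    """Collect table and column names from a SQLite database into catalog entries."""
    entries = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info returns one row per column:
        # (cid, name, type, notnull, dflt_value, pk)
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        entries.append({
            "table": table,
            "columns": [{"name": c[1], "type": c[2]} for c in columns],
        })
    return entries

def search_catalog(entries, keyword):
    """Return tables whose name or any column name contains the keyword."""
    keyword = keyword.lower()
    return [
        e["table"] for e in entries
        if keyword in e["table"].lower()
        or any(keyword in c["name"].lower() for c in e["columns"])
    ]

# Hypothetical database with two tables to catalog.
catalog_db = sqlite3.connect(":memory:")
catalog_db.execute("CREATE TABLE customers (customer_id TEXT, email TEXT)")
catalog_db.execute("CREATE TABLE orders (order_id TEXT, customer_id TEXT, amount REAL)")

catalog = harvest_metadata(catalog_db)
print(search_catalog(catalog, "customer"))  # ['customers', 'orders']
print(search_catalog(catalog, "email"))     # ['customers']
```

Because the entries are rebuilt from the database itself, rerunning `harvest_metadata` after a schema change keeps the catalog current without manual edits.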

What Is a Semantic Layer?

A semantic layer is a special layer that connects complex data systems with business users. It uses common business terms and keeps data consistent. This makes data easier to understand and use for business needs.

https://youtube.com/watch?v=GoDNc274RG4

Definition and Functions

The semantic layer acts as a middleman between raw data and tools for end-users. It changes complex data into terms that are easy for business people to get. Its main jobs are:

- Providing a unified and consistent view of data across various platforms.

- Simplifying data interaction by translating technical jargon into business terminology.

- Enhancing data consistency and reliability for accurate business insights.

- Supporting user-centric data exploration and analysis.

Key Features

Good semantic layers have key features that make them work better and easier for users:

- User-Centric Design: Made for business users, offering easy data access and handling.

- Query Translation: Translates requests phrased in business terms into the technical queries the underlying systems need, and returns results in terms that make sense for the business.

- Maintenance of a Business Glossary: Keeps all data terms and definitions the same across the company, improving data consistency and communication.

With these features, semantic layers make data easy to get to and useful. This helps in making better decisions by having consistent and reliable business terms.
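
The sketch below shows one simple way query translation and a business glossary could fit together: business terms map to SQL expressions, and a small function assembles the query. The glossary entries, the `orders` table, and the `translate` function are assumptions made for illustration; real semantic layers are considerably richer.

```python
# Hypothetical business glossary: business term -> SQL expression or column.
METRICS = {
    "total revenue": "SUM(amount)",
    "order count": "COUNT(*)",
}
DIMENSIONS = {
    "customer": "customer_id",
    "sales channel": "source",
}

def translate(metric, dimension, table="orders"):
    """Build a SQL string for a glossary metric grouped by a glossary dimension."""
    if metric not in METRICS:
        raise KeyError(f"Unknown metric: {metric}")
    if dimension not in DIMENSIONS:
        raise KeyError(f"Unknown dimension: {dimension}")
    column = DIMENSIONS[dimension]
    return (
        f'SELECT {column} AS "{dimension}", {METRICS[metric]} AS "{metric}" '
        f"FROM {table} GROUP BY {column}"
    )

sql = translate("total revenue", "sales channel")
print(sql)
```

A business user only supplies "total revenue" and "sales channel". Because the definition of each term lives in one place, every report that uses it computes the same thing.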

Integration of Data Catalog, Semantic Layer, and Data Warehouse

Combining a data catalog, semantic layer, and data warehouse is key for a smooth data management system. These tools work together to make data processes efficient and effective. This setup makes cross-system functionality easier, cutting down on the hassle of managing different systems.

When these elements work together, they boost reporting accuracy and help in making better decisions. They also make data governance consistent. By linking a data catalog with a semantic layer and a data warehouse, organizations can make data integration easier. This simplifies finding, getting, and using data across different platforms.

This combined setup makes data workflow smoother. It helps data scientists, analysts, and business users work better together. Here are some tips for a smooth integration:

- Make sure data catalog tools fit well with your data warehouse setup.

- Use a semantic layer for uniform data definitions and relationships.

- Run regular checks to keep the three systems consistent with one another and working well.

Following these tips helps organizations get the most out of their data. It creates a flexible and quick-reacting data management setup.
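
One kind of regular check from the tips above could be verifying that every business term in the semantic layer still points at a column that exists in the warehouse. The following is a minimal sketch under that assumption, again using `sqlite3` as a stand-in; the glossary, the `orders` table, and the function names are hypothetical.

```python
import sqlite3

def table_columns(conn, table):
    """Return the set of column names that exist in a warehouse table."""
    # PRAGMA table_info yields one row per column; index 1 holds the column name.
    return {row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')}

def find_broken_terms(conn, table, glossary):
    """List glossary terms whose mapped column is missing from the table."""
    existing = table_columns(conn, table)
    return sorted(term for term, column in glossary.items() if column not in existing)

# Hypothetical warehouse table and glossary; "region" maps to a missing column.
check_db = sqlite3.connect(":memory:")
check_db.execute("CREATE TABLE orders (customer_id TEXT, amount REAL)")
glossary = {"customer": "customer_id", "region": "region_code"}

broken = find_broken_terms(check_db, "orders", glossary)
print(broken)  # ['region']
```

Running a check like this on a schedule would surface drift between the layers before it reaches a report.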

Benefits of Integrating All Three Pillars

Using a Data Catalog, Semantic Layer, and Data Warehouse together has many benefits for businesses. One big plus is improved decision-making. It gives people easy access to accurate and current data. This way, decisions are well-informed.

Another big plus is enhanced data governance. This setup helps keep data safe and in line with rules. It makes sure data management is consistent across the company. This lowers the chance of data leaks or breaking rules.

It also improves data visibility: everyone can see and understand the company's data clearly. This leads to better data management, earlier detection of data quality problems, smoother processes, and less redundant data.

In short, using a Data Catalog, Semantic Layer, and Data Warehouse together helps with better decision-making, stronger data governance, and clearer data visibility. It also boosts overall efficiency and helps the company succeed.

When Are They Required?

Knowing when to use a Data Warehouse, Data Catalog, and Semantic Layer is key for better data management. Each tool has its own role in meeting different business needs and situations.

Data Warehouse

A Data Warehouse is a must-have for companies that handle big data analysis. It stores lots of historical data for queries and insights.

- Large-scale data analysis: Companies like Amazon and Walmart use Data Warehouses to understand customer habits, sales patterns, and stock levels.

- Historical data storage: Banks and financial firms keep track of past transactions in Data Warehouses. This helps in spotting risks and catching fraud.

Data Catalog

A Data Catalog is vital for companies facing strict rules and needing to find and manage data well.

- Compliance requirements: Healthcare providers use Data Catalogs to follow rules like HIPAA, making sure patient data is safe and easy to get.

- Data discovery: Tech companies put Data Catalogs to work to make finding and using metadata across teams easier, boosting teamwork.

- Data governance: Schools use Data Catalogs to keep data quality high and consistent for research.

Semantic Layer

The Semantic Layer is key for making complex data easy to understand for business folks. It turns hard technical data into simple business terms, helping with better decisions.

- Enterprise analytics: Big companies like Google use the Semantic Layer to give their non-tech staff easy access to reports and dashboards.

- Business-friendly data access: Retail shops use the Semantic Layer to let marketing teams make reports without needing to know SQL.

When Are They Not Required?

In some cases, you might not need a Data Warehouse, Data Catalog, or a Semantic Layer. This is true for companies with simple data needs or those focusing on basic analytics. They might find these solutions too complex and expensive.

Data Warehouse

A Data Warehouse isn't needed for businesses with little data or simple data needs. For these companies, querying the operational systems directly might be enough. If you don't need to analyze historical data, or if budget is tight, lighter-weight options are worth considering.

Data Catalog

Companies with few, simple data assets and basic governance rules might not need a Data Catalog. In these cases, a dedicated catalog wouldn't bring much value. Instead, the metadata management built into current systems could work well for basic analytics.

Semantic Layer

A Semantic Layer isn't necessary for businesses with straightforward reporting needs. If your data setup is simple, you might not need complex data layers. Using direct data access can meet your analytics goals without the extra cost.

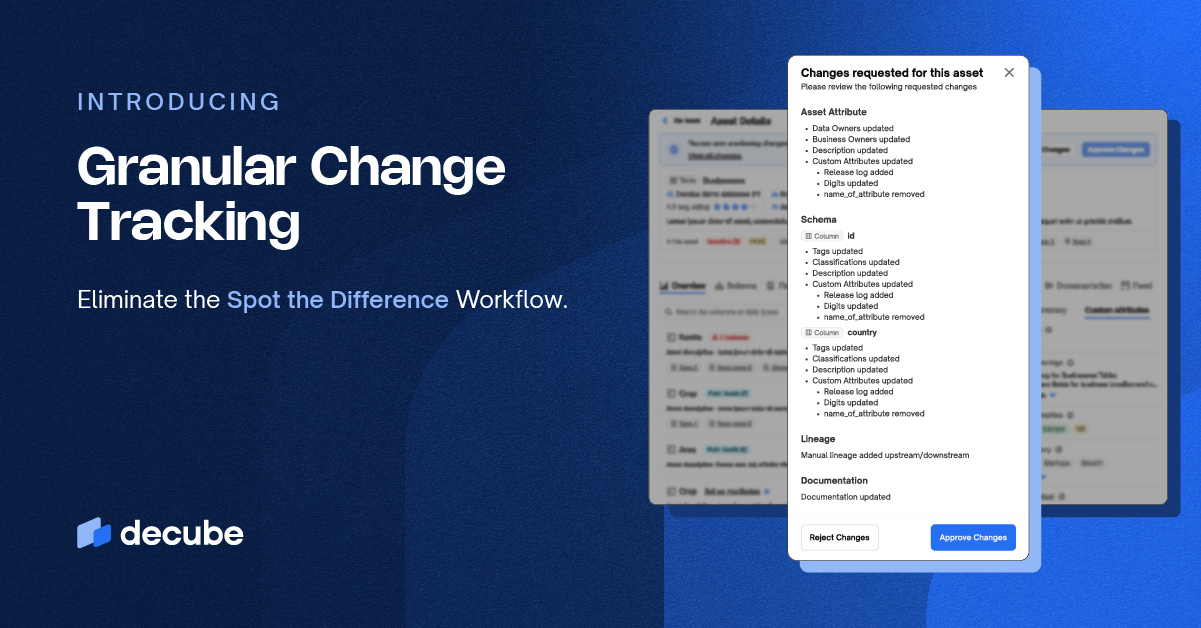

Data Catalog Solutions

Today, businesses rely on data more than ever to make decisions. Decube's Data Catalog is a key tool for managing that data well. It helps teams find and manage data easily, and it scales as the business grows. It offers automated metadata harvesting, collaboration features, and AI-driven insights for better data management.

The automated metadata harvesting in Decube's Data Catalog makes organizing data easier. It also has tools for teamwork and sharing knowledge, which some other solutions don't have. AI-driven insights give businesses a new way to find and use data.

Choosing the right data catalog is important for scalability. Decube's Data Catalog works well for both small and big companies. It can connect with many data sources, offering flexibility that others don't have.

In conclusion, knowing what Decube's Data Catalog offers can help businesses pick the best tool for their data needs. With its automated processes, teamwork tools, and AI insights, Decube leads in finding and managing data effectively.

Conclusion

Using a Data Catalog, Semantic Layer, and Data Warehouse together is key to managing data well. These tools help turn big data into useful insights. This makes a company truly data-driven.

Each tool has its own job. Data Warehouses bring data together. Data Catalogs make finding data easy. Semantic Layers help understand the data better.

This approach makes handling data easier and gives companies an edge. In today's world, data is like the new oil. Companies need tools to use data well. These systems help companies deal with complex data, leading to new ideas and better decisions.

So, using a Data Catalog, Semantic Layer, and Data Warehouse is essential. For companies to stay ahead, these tools are a must. They help in building a strong data-driven company.

FAQ

What is enterprise data management?

Enterprise data management (EDM) is about managing an organization's data well and safely. It makes sure data is correct, consistent, and easy to get to. This is key for good data management and handling data assets.

Why is data governance important?

Data governance is key for keeping data safe, true, and following rules. It sets up rules for data quality, who can see it, and how it's used. This builds trust in data for making decisions and makes data clear within the company.

What is a Data Warehouse and its functions?

A Data Warehouse stores lots of data from different places in one spot. It helps with querying, analyzing, reporting, and mining data. This supports business intelligence (BI) and helps with big data analysis for better decisions.

What are the key features of a Data Warehouse?

Important features of a Data Warehouse include handling a lot of data, supporting quick analysis, bringing together data from many sources, and storing data well. It's made to make data work better and help with detailed data analysis.

What is a Data Catalog and its functions?

A Data Catalog is a list of data assets with extra info to help manage, find, and organize data. It helps with searching for data, improving data management, and making teamwork easier by offering clear metadata and data visibility.

What are the key features of a Data Catalog?

A Data Catalog has automated metadata collection, easy search tools, collaboration features, and works well with other data systems. These help make finding data easier, organize it better, and support managing data assets well.

What is a Semantic Layer and its functions?

A Semantic Layer makes complex data easy to understand using business terms. It simplifies data and gives a clear view of it across different tools and platforms. This makes data more consistent and easier to use.

What are the key features of a Semantic Layer?

Key features of a Semantic Layer include a focus on users, translating queries, and keeping a business glossary. These help make data clear and easy to get to, keep data true and consistent, and support business-friendly data access.

How does the integration of Data Catalog, Semantic Layer, and Data Warehouse optimize data management?

Integrating a Data Catalog, Semantic Layer, and Data Warehouse streamlines data workflows and gives teams a unified way to work across systems. It keeps definitions consistent, makes data easier to find and organize, and supports sound data management across the company.

What are the benefits of integrating a Data Catalog, Semantic Layer, and Data Warehouse?

Benefits include better decision-making with easy and reliable data, stronger data management and following rules, more efficient data handling, and clearer data. This approach creates a strong, data-driven company, using data well for strategic gains.

When is a Data Warehouse required?

A Data Warehouse is needed for big data analysis, storing historical data, and for complex business intelligence (BI) needs. It's key for companies that handle a lot of data from various sources to get insights and support long-term data plans.

When is a Data Catalog required?

A Data Catalog is needed for regulatory compliance, data discovery, and stronger data governance. It suits companies with large numbers of data assets that want to organize and understand them, improve metadata quality, and manage data assets well.

When is a Semantic Layer required?

A Semantic Layer is important for doing enterprise analytics with data that's easy for business to use. It's needed when companies want data to be the same across different tools and platforms, using common business terms.

When might a Data Warehouse not be necessary?

A Data Warehouse might not be needed for small data management or simple analytics. Companies with less complex data might find other, cheaper solutions better for their needs without needing a full Data Warehouse.

When might a Data Catalog not be necessary?

A Data Catalog might not be needed for companies with few data assets, not needing much metadata management, or in simple data environments. In these cases, simpler tools for organizing and finding data might be more practical and cost-effective.

When might a Semantic Layer not be necessary?

A Semantic Layer may not be required if data is not complex and keeping business terms consistent is not a big deal. For companies with straightforward data needs, a semantic layer might add too much complexity and cost.

What solutions does Decube offer in terms of Data Catalogs?

Decube offers a full Data Catalog solution with automated metadata collection, tools for working together, and AI insights. It helps with finding and managing data assets, growing data solutions, and working well with other data systems, making it a strong choice for organizing and optimizing enterprise data.
